
When an Algorithm Helps Send You to Prison

Key Excerpts from Article on Website of the New York Times

Posted: November 6th, 2017

https://www.nytimes.com/2017/10/26/opinion/algorithm-compas-...

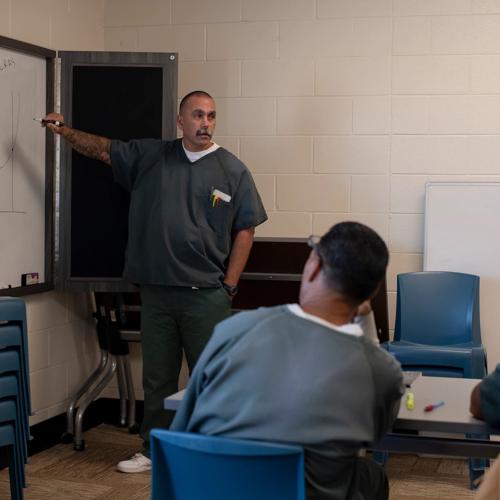

Eric Loomis pleaded guilty to attempting to flee an officer, and no contest to operating a vehicle without the owner's consent. Neither of his crimes mandates prison time. At Mr. Loomis's sentencing, the judge cited, among other factors, Mr. Loomis's high risk of recidivism as predicted by a computer program called COMPAS, a risk assessment algorithm used by the state of Wisconsin. The judge denied probation and prescribed an 11-year sentence.

No one knows exactly how COMPAS works; its manufacturer refuses to disclose the proprietary algorithm. We only know the final risk assessment score it spits out, which judges may consider at sentencing. Mr. Loomis challenged the use of an algorithm as a violation of his due process rights. The United States Supreme Court declined to hear his case, meaning a majority of justices effectively condoned the algorithm's use.

Shifting the sentencing responsibility [from judges] to a computer does not necessarily eliminate bias; it delegates and often compounds it. Algorithms like COMPAS simply mimic the data with which we train them. An algorithm that accurately reflects our world also necessarily reflects our biases. A ProPublica study found that COMPAS predicts black defendants will have higher risks of recidivism than they actually do, while white defendants are predicted to have lower rates than they actually do.

