Military Corruption News Stories

Below are key excerpts of revealing news articles on military corruption from reliable news media sources. If any link fails to function, a paywall blocks full access, or the article is no longer available, try these digital tools.

For further exploration, delve into our comprehensive Military-Intelligence Corruption Information Center.

AI could mean fewer body bags on the battlefield — but that's exactly what terrifies the godfather of AI. Geoffrey Hinton, the computer scientist known as the "godfather of AI," said the rise of killer robots won't make wars safer. It will make conflicts easier to start by lowering the human and political cost of fighting. Hinton said ... that "lethal autonomous weapons, that is weapons that decide by themselves who to kill or maim, are a big advantage if a rich country wants to invade a poor country." "The thing that stops rich countries invading poor countries is their citizens coming back in body bags," he said. "If you have lethal autonomous weapons, instead of dead people coming back, you'll get dead robots coming back." That shift could embolden governments to start wars — and enrich defense contractors in the process, he said. Hinton also said AI is already reshaping the battlefield. "It's fairly clear it's already transformed warfare," he said, pointing to Ukraine as an example. "A $500 drone can now destroy a multimillion-dollar tank." Traditional hardware is beginning to look outdated, he added. "Fighter jets with people in them are a silly idea now," Hinton said. "If you can have AI in them, AIs can withstand much bigger accelerations — and you don't have to worry so much about loss of life." One Ukrainian soldier who works with drones and uncrewed systems [said] in a February report that "what we're doing in Ukraine will define warfare for the next decade."

Note: As law expert Dr. Salah Sharief put it, "The detached nature of drone warfare has anonymized and dehumanized the enemy, greatly diminishing the necessary psychological barriers of killing." For more, read our concise summaries of news articles on AI and warfare technology.

The very week the United States’ Defense Security Cooperation Agency notified Congress of a $346 million arms sale to Nigeria, the U.S. State Department also released its 2024 Country Report on Human Rights Practices in the West African country. Security forces of Nigeria, Washington’s most significant ally in Sub-Saharan Africa, habitually operate with impunity and without due regard for human rights protection, a key condition for receiving U.S. security cooperation. For example, the report spotlighted the following human rights abuses as ongoing concerns: “arbitrary and unlawful killings; disappearances; or cruel, inhuman, or degrading treatment or punishment; arbitrary arrest or detention; serious abuses in a conflict.” It also claimed that “military operations against ISIS-WA, Boko Haram, and criminal organization targets” often resulted in civilian deaths. Other findings include the use of “excessive force,” “sexual violence and other forms of abuse” by the military. Since the 1950s, the U.S. has been the world’s leading arms-exporting nation, accounting for 42 percent of all global arms exports between 2019 and 2023. Several laws exist ostensibly to regulate and ensure that U.S. security assistance is provided to allies without undermining America’s core values. However, not once have any of the relevant legal provisions conditioning arms sales on respect for human rights and civilian harm concerns been enforced.

Note: Learn about the loophole that allows US to fund child soldiers in countries like Nigeria. For more, read our concise summaries of news articles on military corruption.

While Defense Secretary Pete Hegseth obsesses over the supposed “softening” and “weakening” of American troops, the Pentagon is concealing the scale of a real threat to the lives of his military’s active-duty members: a suicide crisis killing hundreds of members of the U.S. Air Force. Data The Intercept obtained via the Freedom of Information Act shows that of the 2,278 active-duty Air Force deaths between 2010 and 2023, 926 (about 41 percent) were suicides, overdoses, or preventable deaths from high-risk behavior in a period when combat deaths were minimal. This is the first published detailed breakdown of Air Force suicide data. In 2022, the National Defense Authorization Act required the Defense Department to report suicides by year, career field, and duty status, but neither the department nor the Air Force complied. Congress has done little to enforce thorough reporting. From 2010 to 2023, active-duty maintainers had a suicide rate of 27.4 per 100,000 personnel, nearly twice the 14.2 per 100,000 among U.S. civilians, or a 1.93 times higher risk. FOIA records show the most common methods were self-inflicted gunshot wounds to the head and hanging. Other methods included sodium nitrite ingestion, helium inhalation, and carbon monoxide poisoning. [The dataset] shows a troubling pattern of preventable deaths that leaders at the senior officer level or above minimized or ignored, often claiming that releasing detailed suicide information would pose a risk to national security. Current and former service members described a fear of bullying, hazing, and professional retaliation for seeking mental health treatment.

Note: Read about the tragic traumas and suicides connected to military drone operators. A recent Pentagon study concluded that US soldiers are nine times more likely to die by suicide than they are in combat. For more along these lines, read our concise summaries of news articles on mental health and military corruption.

President Trump is rattling his saber against Colombian President Gustavo Petro to punish him for accusing the U.S. government of murdering Venezuelan fishermen. Trump warned Petro that he “better close up” cocaine production “or the United States will close them up for him, and it won’t be done nicely.” Is anyone in the Trump White House aware of the long history of U.S. failure in that part of the world? Colombia remains the world’s largest cocaine producer despite billions of dollars of U.S. government anti-drug aid to the Colombian government. The Clinton administration made Colombia its top target in its international war on drugs. Clinton drug warriors deluged the Colombian government with U.S. tax dollars to deluge Colombia with toxic spray. The New York Times reported that U.S.-financed planes repeatedly sprayed pesticides onto schoolchildren, making many of them ill. At the same time that the Clinton administration was sacrificing the health of Colombian children in its quixotic anti-drug crusade ... Laurie Hiett, the wife of Colonel James Hiett, the top U.S. military commander in Colombia, exploited U.S. embassy diplomatic pouches to ship 15 pounds of heroin and cocaine to New York. She pocketed tens of thousands of dollars in narcotic profits. After she was caught and convicted, she received far more lenient treatment than most drug offenders: only five years in prison. Her husband, ridiculed as the “Coke Colonel” in the New York Post, received only six months in prison for laundering drug proceeds and concealing his wife’s crimes.

Note: Aerial spraying of pesticides is labeled as a public-health and anti-drug intervention designed to eradicate coca crops. The War on Drugs has been called a trillion dollar failure that targets everyday people while protecting the covert activities of the rich and powerful. See our in-depth investigation into the dark truths behind the War on Drugs.

Those who have kept track of the rise of the Thielverse, which includes figures such as Peter Thiel, Elon Musk and JD Vance, have understood that an agenda to usher in a unique form of authoritarianism has been slowly introduced into the mainstream political atmosphere. “I think now it’s quite clear that this is the PayPal Mafia’s moment. These particular figures have had an extremely significant influence on US government policy since January, including the extreme distribution of AI throughout the US government,” [investigative journalist Whitney] Webb explains. It’s clear that the architects of mass surveillance and the military industrial complex are beginning to coalesce in unprecedented ways within the Trump administration and Webb emphasizes that now is the time to pay attention and push back against these new forces. If they have their way, all commercial technology will be completely folded into the national security state — acting blatantly as the new infrastructure for techno-authoritarian rule. The underlying idea behind this new system is “pre-crime,” or the use of mass surveillance to designate people criminals before they’ve committed any crime. Webb warns that the Trump administration and its benefactors will demonize segments of the population to turn civilians against each other, all in pursuit of building out this elaborate system of control right under our noses.

Note: Read about Peter Thiel's involvement in the military origins of Facebook. For more along these lines, read our concise summaries of news articles on Big Tech and the disappearance of privacy.

“Ice is just around the corner,” my friend said, looking up from his phone. A day earlier, I had met with foreign correspondents at the United Nations to explain the AI surveillance architecture that Immigration and Customs Enforcement (Ice) is using across the United States. The law enforcement agency uses targeting technologies which one of my past employers, Palantir Technologies, has both pioneered and proliferated. Technology like Palantir’s plays a major role in world events, from wars in Iran, Gaza and Ukraine to the detainment of immigrants and dissident students in the United States. Known as intelligence, surveillance, target acquisition and reconnaissance (Istar) systems, these tools, built by several companies, allow users to track, detain and, in the context of war, kill people at scale with the help of AI. They deliver targets to operators by combining immense amounts of publicly and privately sourced data to detect patterns, and are particularly helpful in projects of mass surveillance, forced migration and urban warfare. Also known as “AI kill chains”, they pull us all into a web of invisible tracking mechanisms that we are just beginning to comprehend, yet are starting to experience viscerally in the US as Ice wields these systems near our homes, churches, parks and schools. The dragnets powered by Istar technology trap more than migrants and combatants ... in their wake. They appear to violate First and Fourth Amendment rights.

Note: Read how Palantir helped the NSA and its allies spy on the entire planet. Learn more about emerging warfare technology in our comprehensive Military-Intelligence Corruption Information Center. For more, read our concise summaries of news articles on AI and Big Tech.

Local cops have gotten tens of millions of dollars’ worth of discounted military gear under a secretive federal program that is poised to grow under recent executive action. The 1122 program ... presents a danger to people facing off against militarized cops, according to Women for Weapons Trade Transparency. “All of these things combined serve as a threat to free speech, an intimidation tactic to protest,” said Lillian Mauldin, the co-founder of the nonprofit group, which produced the report released this week. The federal government’s 1033 program ... has long sent surplus gear like mine-resistant vehicles and bayonets to local police. Since 1994, however, the even more obscure 1122 program has allowed local cops to purchase everything from uniforms to riot shields at federal government rates. The program turns the feds into purchasing agents for local police. Local cops have used the program to pick up 16 Lenco BearCats, fearsome-looking armored police vehicles. Those vehicles represented 4.8 percent of the total spending identified in the ... report. Surveillance gear and software represented another 6.4 percent, and weapons or riot gear represented 5 percent. One agency bought a $428,000 Star Safire thermal imaging system, the kind used in military helicopters. The Texas Department of Public Safety’s intelligence and counterterrorism unit purchased a $1.5 million surveillance software license. Another agency bought an $89,000 covert camera system.

Note: Read more about the Pentagon's 1033 program. For more along these lines, read our concise summaries of news articles on police corruption and the erosion of civil liberties.

The United States has long used drone strikes to take out people it alleges are terrorists or insurgents. President Donald Trump has taken this tactic to new extremes, boasting about lethal strikes against alleged drug boats in the Caribbean and declaring the U.S. is in a “non-international armed conflict” with narcotics traffickers. Trump appears to be merging the war on terror with the war on drugs. This comes as he’s simultaneously ramping up the use of troops to police inside American cities. The modern drug war began during President Richard Nixon’s administration. In 1994, the journalist Dan Baum tracked down Nixon aide John Ehrlichman and interviewed him. He said, “Look. The Nixon campaign in ’68 and the Nixon White House had two enemies: Black people and the antiwar left. [V]ilify them night after night on the evening news, and we thought if we can associate heroin with Black people in the public mind and marijuana with the hippies this would be perfect.” And [Ehrlichman] said, “Did we know we were lying about the drugs? Of course we did.” This line of thinking drove policies designed to “unleash” law enforcement. The Nixon administration tried to relax wiretapping laws, roll back Miranda rights, and erode Fourth Amendment protections against unconstitutional searches and seizures. And now we’re seeing the Trump administration push even harder to roll back constitutional protections.

Note: Though President Richard Nixon launched the War on Drugs by declaring drugs “public enemy No. 1,” secretly he admitted in a 1973 Oval Office meeting that marijuana was “not particularly dangerous.” The War on Drugs is a trillion dollar failure that has been made worse by every presidential administration since Nixon. Don't miss our in-depth investigation into the dark truths behind the War on Drugs. For more along these lines, read our concise summaries of news articles on military corruption and the War on Drugs.

Seth Harp’s The Fort Bragg Cartel: Drug Trafficking and Murder in the Special Forces [is] an exposé of the criminality and violence carried out by returning Special Forces personnel in American communities. We’re in the middle of a political crisis right now in which the military’s role is being radically expanded, including into US domestic life, all on the basis of fighting crime and drugs, and drugs being a national security threat. Yet ... damaged soldiers end up carrying out crime and violence at home as well as getting involved in the drug trade. Todd Michael Fulkerson, a Green Beret who was trained at Bragg, was convicted earlier this year of trafficking narcotics with the Sinaloa cartel. Another guy, Jorge Esteban Garcia, who was the top career counselor at Fort Bragg for twenty years — his job was to mentor and coach retiring soldiers on their career prospects — was literally recruiting for a cartel and was convicted of trafficking methamphetamine and supporting a violent extremist organization. And then a group of soldiers in the 44th Medical Brigade at Fort Bragg — all these soldiers are at Fort Bragg — were convicted of trafficking massive amounts of ketamine. You can look at every single region of the world that’s a massive drug production center — which there really are not that many of them — and in every case, you can see that US military intervention preceded the country’s becoming a narco state, not the other way around.

Note: Don't miss our in-depth investigation into the dark truths behind the War on Drugs. For more along these lines, read our concise summaries of news articles on military corruption and the War on Drugs.

The European Union expects Georgia to change radically to accommodate the EU. The Georgian government expects the EU to change radically to accommodate Georgia. What brought matters to a head between Georgia and the EU was the Russian invasion of Ukraine in 2022. The Georgian government condemned the invasion, sent humanitarian aid to Ukraine and imposed certain sanctions on Russia. However, it tried to block Georgian volunteers going to Ukraine to fight and rejected Western pressure to send military aid and to impose the full range of EU sanctions, leading to fresh accusations of being “pro-Russian.” On this, President Kavelashvili pushed back very strongly. He accused the West of trying to provoke a new war with Russia that would be catastrophic for Georgia. “Georgia has a government that represents the interests of our people… the same media outlets that accuse us of being under Russian influence tell the same lie about President Trump,” [he said]. President Kavelashvili accused the U.S. “deep state” and organizations like USAID, the National Endowment for Democracy, and the European Parliament of mobilizing the Georgian opposition to this end; “but despite all this pressure, we stood and continue to stand as guardians of Georgian national interest and of Georgian economic growth.” The latter comment was a veiled reference to the very important economic links between Georgia and Russia.

Note: Our Substack, Working Together To End the War On Peace in Ukraine, challenges the dominant narrative on the Ukraine war, arguing that US and NATO policies, wartime corruption, media censorship, and corporate profiteering have fueled the conflict while blocking genuine peace efforts. Learn more about how war is a tool for hidden agendas in our comprehensive Military-Intelligence Corruption Information Center. For more, read our concise summaries of news articles on war.

You are a fisherman. Suddenly, you die. A man you have never met and whose presence you did not know about has shot you with his rifle. His companions stab your lungs so that your body will sink to the bottom of the sea. Your family will likely never know what happened to you. That is what happened to a group of unnamed North Korean fishermen who accidentally stumbled upon a detachment of U.S. Navy SEALs in 2019. The commandos had set out to install a surveillance device to wiretap government communications in North Korea. When they stumbled upon an unexpected group of divers on a boat, the SEALs killed everyone on board and retreated. The U.S. government concluded that the victims were "civilians diving for shellfish." Officials didn't even know how many, telling the [New York] Times that it was "two or three people," even though the SEALs had searched the boat and disposed of the bodies. The mission wasn't just an intelligence failure. It was a failure that killed real people. The U.S. government "often" hides the failures of special operations from policymakers. Seth Harp, author of The Fort Bragg Cartel, roughly estimates that Joint Special Operations Command killed 100,000 people during the Iraq War "surge" from 2007 to 2009. The secrecy around America's spying-and-assassination complex makes it impossible to know how many of those people were simply in the wrong place at the wrong time.

Note: For more along these lines, read our concise summaries of news articles on military corruption.

Digital technology was sold as a liberating tool that could free individuals from state power. Yet the state security apparatus always had a different view. The Prism leaks by whistleblower Edward Snowden in 2013 revealed a deep and almost unconditional cooperation between Silicon Valley firms and security apparatuses of the state such as the National Security Agency (NSA). People realized that basically any message exchanged via Big Tech firms including Google, Facebook, Microsoft, Apple, etc. could be easily spied upon with direct backdoor access: a form of mass surveillance with few precedents ... especially in nominally democratic states. The leaks prompted outrage, but eventually most people preferred to look away. The most extreme case is the surveillance and intelligence firm Palantir. Its service is fundamentally to provide a more sophisticated version of the mass surveillance that the Snowden leaks revealed. In particular, it endeavors to support the military and police as they aim to identify and track various targets — sometimes literal human targets. Palantir is a company whose very business is to support the security state in its most brutal manifestations: in military operations that lead to massive loss of life, including of civilians, and in brutal immigration enforcement [in] the United States. Unfortunately, Palantir is but one part of a much broader military-information complex, which is becoming the axis of the new Big Tech Deep State.

Note: For more along these lines, read our concise summaries of news articles on corruption in the intelligence community and in Big Tech.

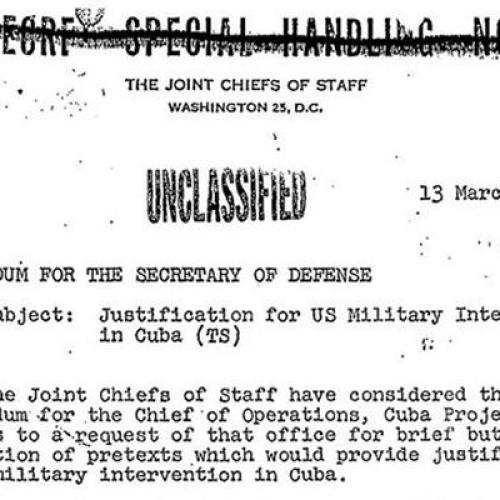

It’s no secret that the US government sought to assassinate Fidel Castro for years. Less well known, however, was that part of their regime-change plot included a plan to blow up Miami and sink a boatload of innocent Cubans. The plan, which was revealed in 2017 when the National Archives declassified 2,800 documents from the JFK era, was a collaborative effort that included the CIA, the State Department, the Department of Defense, and other federal agencies that sought to brainstorm strategies to topple Castro and sow unrest within Cuba. One of those plans was Operation Northwoods, submitted to the CIA by General Lyman Lemnitzer on behalf of the Joint Chiefs of Staff. One of the official CIA documents shows officials musing about staging a terror campaign (“real or simulated”) and blaming it on Cuban refugees. In the summer of 2014, the CIA’s inspector general concluded that the CIA had “improperly” spied on US Senate staffers who were researching the agency’s black history of torture. CIA officers ... also sent a criminal referral to the Justice Department based on false information. In the wake of 9/11, the FBI has, on numerous occasions, targeted unstable and mentally ill individuals, sending informants to bait them into committing terror attacks. Before these individuals can actually carry out the attack, however, the Bureau intervenes, presenting the foiled plot to the public as a successfully thwarted attack.

Note: Read more about Operation Northwoods. Learn more about the rise of the CIA in our comprehensive Military-Intelligence Corruption Information Center. For more, read our concise summaries of news articles on intelligence agency corruption.

The Trump administration is evaluating plans that would establish a “Domestic Civil Disturbance Quick Reaction Force” composed of hundreds of National Guard troops tasked with rapidly deploying into American cities facing protests or other unrest, according to internal Pentagon documents reviewed by The Washington Post. The plan calls for 600 troops to be on standby at all times so they can deploy in as little as one hour, the documents say. They would be split into two groups of 300 and be stationed at military bases in Alabama and Arizona, with purview of regions east and west of the Mississippi River, respectively. Cost projections outlined in the documents indicate that such a mission, if the proposal is adopted, could stretch into the hundreds of millions of dollars. Trump has summoned the military for domestic purposes like few of his predecessors have. He did so most recently Monday, authorizing the mobilization of 800 D.C. National Guard troops to bolster enhanced law enforcement activity in Washington. The proposal represents a major departure in how the National Guard traditionally has been used, said Lindsay P. Cohn, an associate professor of national security affairs at the U.S. Naval War College. While it is not unusual for National Guard units to be deployed for domestic emergencies within their states, including for civil disturbances, this “is really strange because essentially nothing is happening,” she said.

Note: For more along these lines, read our concise summaries of news articles on military corruption and the erosion of civil liberties.

What began as a fairly small protest against an Immigration and Customs Enforcement (ICE) raid at an apparel manufacturer in the Fashion District in downtown Los Angeles on June 6 led to an immediate response by federal agents in riot gear. [On June 7], President Donald Trump ... called in the National Guard. The deployment of troops in Los Angeles is the brutal culmination of a yearslong campaign to systematically erode and circumscribe public assembly rights, enabled by both Democrats and Republicans at all levels of government. Political scientists call this “democratic backsliding”: the gradual erosion of basic rights, civil liberties, and other political institutions that allow the public to hold the government to account. This war on dissent is the most visible sign of democratic backsliding in the U.S. By using the National Guard to silence dissent in Los Angeles, the Trump administration is eroding a core pillar of democracy: the right to assemble in public to express opinions contrary to government action and to advocate for change. U.S. police forces developed [an] approach to public order policing called “negotiated management” in the 1980s and 1990s. Under negotiated management, police tried to respect the right of public assembly. However, in response to the anti-globalization protests at the 1999 World Trade Organization meeting in Seattle ... police shifted to a new set of tactics called “strategic incapacitation” that would provide them with more control.

Note: For more along these lines, read our concise summaries of news articles on military corruption and the erosion of civil liberties.

A new Pentagon report offers the grimmest assessment yet of the results of the last 10 years of U.S. military efforts [in Africa]. It corroborates years of reporting on catastrophes that U.S. Africa Command has long attempted to ignore or cover up. Fatalities from militant Islamist violence spiked over the years of America’s most vigorous counterterrorism efforts on the continent, with the areas of greatest U.S. involvement — Somalia and the West African Sahel — suffering the worst outcomes. “Africa has experienced roughly 155,000 militant Islamist group-linked deaths over the past decade,” reads a new report by the Africa Center for Strategic Studies. “What many people don’t know is that the United States’ post-9/11 counterterrorism operations actually contributed to and intensified the present-day crisis,” [said] Stephanie Savell, director of the Costs of War Project at Brown University. The U.S. provided tens of millions of dollars in weapons and training to the governments of countries like Burkina Faso and Niger, which are experiencing the worst spikes in violent deaths today, she said. In 2002 and 2003 ... the State Department counted a total of just nine terrorist attacks, resulting in a combined 23 casualties across the entire continent. Last year, there were 22,307 fatalities from militant Islamist violence in Africa. At least 15 officers who benefited from U.S. security assistance were key leaders in a dozen coups in West Africa and the greater Sahel.

Note: Read more about the Pentagon's recent military failures in Africa. Learn more about how war is a tool for hidden agendas in our comprehensive Military-Intelligence Corruption Information Center. For more, read our concise summaries of news articles on military corruption.

Thousands of federal troops have been deployed to Los Angeles since June 7 on the orders of President Donald Trump. The more than 5,000 National Guard soldiers and Marines ... were sent to “protect the safety and security of federal functions, personnel, and property.” In practice, this has mostly meant guarding federal buildings across LA from protests. Since Trump called up the troops on June 7, they have carried out exactly one temporary detainment. The deployments are expected to cost the public hundreds of millions of dollars. Troops were sent to LA over the objections of local officials and California Gov. Gavin Newsom. In addition to guarding federal buildings, troops have also recently participated in raids alongside camouflage-clad ICE agents. “To have armored vehicles deployed on the streets of our city, to federalize the National Guard, to have the U.S. Marines who are trained to kill abroad, deployed to our city — all of this is outrageous and it is un-American,” Los Angeles Mayor Karen Bass [said]. California National Guard soldiers also backed ICE raids on state-licensed marijuana nurseries last week. The troops took part in the military-style assaults on two locations. ICE detained more than 200 people, including U.S. citizens, during the joint operations. One man, Jaime Alanís Garcia, died. Experts say that the introduction of military troops into civilian law enforcement support further strains civil-military relations and risks violation of the Posse Comitatus Act.

Note: According to the Brennan Center for Justice, this use of federal troops for civilian law enforcement is likely illegal under the Posse Comitatus Act because it wasn't "expressly authorized by the Constitution or Act of Congress." The systematic militarization of domestic police forces is well-reported, and has been going on for years. Now, the National Guard is increasingly being trained to treat protesters like enemy troops. What happens to civil liberties when civil society is viewed by authorities as a battle-front?

Americans working for a little-known U.S.-based private military contractor have begun to come forward to media and members of Congress with charges that their work has involved using live ammunition for crowd control and other abusive measures against unarmed civilians seeking food at controversial food distribution sites run by the Gaza Humanitarian Foundation (GHF). UG Solutions was hired by the GHF to secure and deliver food into Gaza. Israel put GHF in control of what used to be the UN-led aid mission. The UN ... has called the new model an "abomination" which “provides nothing but starvation and gunfire to the people of Gaza,” referring to the 1,000 Gazans who have been killed near or at the GHF centers since May. The Israeli Defense Forces (IDF) have been accused of shooting and shelling unarmed civilians. The American contractors say they have witnessed it and have been told to use live ammunition in their own crowd control efforts. UG Solutions is a mercenary group. Its contractors are not a party to the conflict in Gaza, were recruited to participate in hostilities, were not sent by the U.S. government, are not nationals of a party to the conflict, are not part of a military, and are there for personal gain. Like Blackwater, they are primarily conducting defensive operations. And although the U.S. State Department has helped fund the GHF, UG Solutions is headquartered in the U.S. and is working for a foreign entity, in a combat zone, for money.

Note: Learn more about human rights abuses during wartime in our comprehensive Military-Intelligence Corruption Information Center. For more, read our concise summaries of news articles on war.

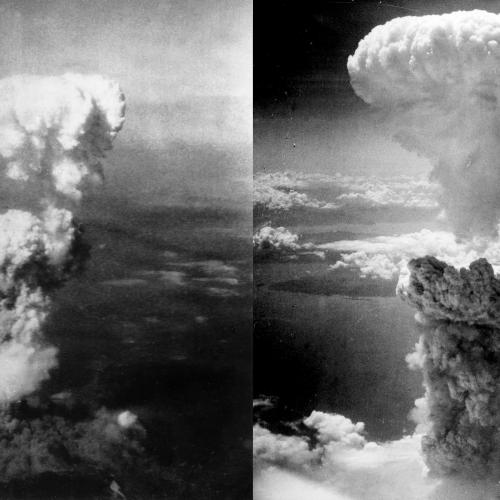

In 1945, the horrors unleashed in Hiroshima and Nagasaki were largely hidden from the outside world. Eighty years later, thanks to the testimonies shared by those who survived the atomic bombings of my country, we have a window into the truth of what happened on those dark August days when weapons of previously unimaginable power destroyed our cities. We also know what happened over the torturous months and years that followed, as those who weren’t immediately burned alive succumbed to radiation poisoning and cancer. Nuclear bombing survivors have helped ... fuel public demand for post-Cold War arms-control treaties that resulted in significant stockpile reductions in the United States and Russia. They helped persuade nuclear-armed countries to stop explosive weapons tests that caused grave harm to the environment and to the servicemembers and civilians involved. They worked to establish the “nuclear taboo” that has spared the use of nuclear weapons in warfare for eight decades. They delivered millions of petition signatures to the United Nations that helped ... reduce nuclear risks. Again and again, they have proved that progress is possible, and for their decades of work to ensure that ... no country ever again face the unthinkable, the survivors in 2024 were awarded the Nobel Peace Prize. Demanding a nuclear-free world isn’t naive. True naivete is believing that weapons designed to annihilate cities will keep us safe.

Note: Learn more about war failures and lies in our comprehensive Military-Intelligence Corruption Information Center. For more, read our concise summaries of news articles on military corruption.

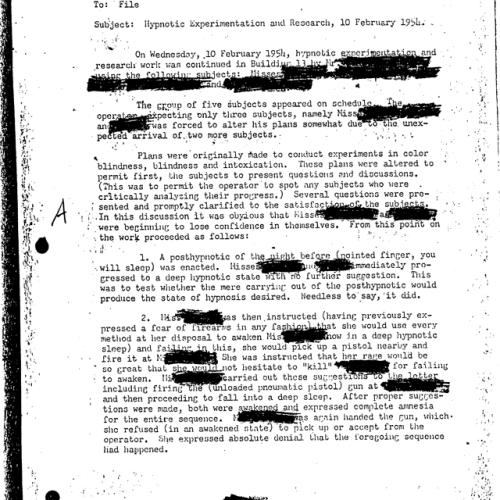

Between 1953 and 1973, MKUltra, a secret CIA program, explored ... mind-control drugs that the U.S. could use as weapons. By drugging civilians and government workers (without consent), the program’s researchers wanted to observe the effects of drugs like LSD, ultimately hoping to make people biddable to carry out tasks like secret assassinations. Details of MKUltra began to emerge in 1974, when a New York Times story exposed the CIA’s unethical and illegal practices, leading to a Senate investigation and public revelations. The full extent of MKUltra’s activities will likely remain a mystery, since CIA director Richard Helms ordered all of the program’s records to be destroyed the year before. J. Edgar Hoover’s FBI launched the Counterintelligence Program, or COINTELPRO, in the thick of the Cold War. Its objective: mitigate the influence of the Communist Party of the United States. COINTELPRO used a range of tactics to surveil and sabotage its targets, such as undermining them in the public eye or sowing conflict to weaken them. The program gradually broadened its scope to include ... leading activists in the civil rights movement, including Martin Luther King, Jr. As the U.S. built a stockpile of nuclear arms during the Cold War, a new risk emerged: broken arrow incidents in which nuclear weapons are stolen, lost, or mishandled. America has officially owned up to 32 broken arrow incidents.

Note: Read our comprehensive Substack investigation that uncovers the dark truths behind the assassination of Martin Luther King Jr. Learn more about the MKUltra Program in our comprehensive Military-Intelligence Corruption Information Center. For more, read our concise summaries of news articles on intelligence agency corruption.

Important Note: Explore our full index to revealing excerpts of key major media news stories on several dozen engaging topics. And don't miss amazing excerpts from 20 of the most revealing news articles ever published.