Big Tech News Stories

In his 1963 sci-fi story “The Invincible,” the Polish writer Stanisław Lem imagined an artificial species of free-floating nanobots which roamed the atmosphere of a far-off planet. Like tiny bugs, the microscopic beings were powerless alone, but together they could form cooperative swarms to gather energy, reproduce, and ultimately defend their territory from predators with deadly force. Lem probably never imagined his evolutionary parable of living dust was just a few decades from becoming a reality, or that it would become the inspiration for the development of a real-life military technology known as “smart dust.” Starting out as a theoretical research proposal to the Defense Advanced Research Projects Agency (DARPA) ... smart dust is now being developed for use in a wide variety of industries, from environmental studies to commercial mining. That’s according to Interesting Engineering, which recently published a rundown of the state of present-day smart dust after decades of development. Though “dust” remains a bit of a misnomer (it’s more like a bunch of tiny sensors capable of relaying data to a central device), there’s a large body of theoretical and simulated work laying a path for practical microengineering that’s steadily coming into its own. In the future, [smart dust is] hoped to be able to report a near-infinite amount of data in suspended, 3D environments. The current “smart dust industry” ... was valued at around $115 million in 2022.

Note: Smart dust can theoretically be used to spy on human thought. For more along these lines, read our concise summaries of news articles on Big Tech and the disappearance of privacy.

AI could mean fewer body bags on the battlefield — but that's exactly what terrifies the godfather of AI. Geoffrey Hinton, the computer scientist known as the "godfather of AI," said the rise of killer robots won't make wars safer. It will make conflicts easier to start by lowering the human and political cost of fighting. Hinton said ... that "lethal autonomous weapons, that is weapons that decide by themselves who to kill or maim, are a big advantage if a rich country wants to invade a poor country." "The thing that stops rich countries invading poor countries is their citizens coming back in body bags," he said. "If you have lethal autonomous weapons, instead of dead people coming back, you'll get dead robots coming back." That shift could embolden governments to start wars — and enrich defense contractors in the process, he said. Hinton also said AI is already reshaping the battlefield. "It's fairly clear it's already transformed warfare," he said, pointing to Ukraine as an example. "A $500 drone can now destroy a multimillion-dollar tank." Traditional hardware is beginning to look outdated, he added. "Fighter jets with people in them are a silly idea now," Hinton said. "If you can have AI in them, AIs can withstand much bigger accelerations — and you don't have to worry so much about loss of life." One Ukrainian soldier who works with drones and uncrewed systems [said] in a February report that "what we're doing in Ukraine will define warfare for the next decade."

Note: As law expert Dr. Salah Sharief put it, "The detached nature of drone warfare has anonymized and dehumanized the enemy, greatly diminishing the necessary psychological barriers of killing." For more, read our concise summaries of news articles on AI and warfare technology.

Senior officials in the Biden administration, including some White House officials, "conducted repeated and sustained outreach" and "pressed" Google- and YouTube parent-company Alphabet "regarding certain user-generated content related to the COVID-19 pandemic that did not violate [Alphabet's] policies," the company revealed yesterday. While Alphabet "continued to develop and enforce its policies independently, Biden Administration officials continued to press [Alphabet] to remove non-violative user-generated content," a lawyer for Alphabet wrote in a September 23 letter to House Judiciary Committee Chairman Jim Jordan. Administration officials including Biden "created a political atmosphere that sought to influence the actions" of private tech platforms regarding the moderation of misinformation. This is what has come to be known as "jawboning," and the fact that it doesn't involve direct censorship may make it even more insidious. Direct censorship can be challenged in court. This sort of wink-and-nod regulation of speech leaves companies and their users with little recourse. What's more, each time authorities stray from the spirit of the First Amendment, it makes it that much easier for future authorities to do so. And each time Democrats (or Republicans) use government power to try and suppress free speech, it gives them even less standing to say it's wrong when their opponents do that.

Note: Read more about the sprawling federal censorship enterprise that took shape during the Biden administration. For more along these lines, read our concise summaries of news articles on censorship and government corruption.

Those who have kept track of the rise of the Thielverse, which includes figures such as Peter Thiel, Elon Musk and JD Vance, have understood that an agenda to usher in a unique form of authoritarianism has been slowly introduced into the mainstream political atmosphere. “I think now it’s quite clear that this is the PayPal Mafia’s moment. These particular figures have had an extremely significant influence on US government policy since January, including the extreme distribution of AI throughout the US government,” [investigative journalist Whitney] Webb explains. It’s clear that the architects of mass surveillance and the military industrial complex are beginning to coalesce in unprecedented ways within the Trump administration and Webb emphasizes that now is the time to pay attention and push back against these new forces. If they have their way, all commercial technology will be completely folded into the national security state — acting blatantly as the new infrastructure for techno-authoritarian rule. The underlying idea behind this new system is “pre-crime,” or the use of mass surveillance to designate people criminals before they’ve committed any crime. Webb warns that the Trump administration and its benefactors will demonize segments of the population to turn civilians against each other, all in pursuit of building out this elaborate system of control right under our noses.

Note: Read about Peter Thiel's involvement in the military origins of Facebook. For more along these lines, read our concise summaries of news articles on Big Tech and the disappearance of privacy.

“Ice is just around the corner,” my friend said, looking up from his phone. A day earlier, I had met with foreign correspondents at the United Nations to explain the AI surveillance architecture that Immigration and Customs Enforcement (Ice) is using across the United States. The law enforcement agency uses targeting technologies which one of my past employers, Palantir Technologies, has both pioneered and proliferated. Technology like Palantir’s plays a major role in world events, from wars in Iran, Gaza and Ukraine to the detainment of immigrants and dissident students in the United States. Known as intelligence, surveillance, target acquisition and reconnaissance (Istar) systems, these tools, built by several companies, allow users to track, detain and, in the context of war, kill people at scale with the help of AI. They deliver targets to operators by combining immense amounts of publicly and privately sourced data to detect patterns, and are particularly helpful in projects of mass surveillance, forced migration and urban warfare. Also known as “AI kill chains”, they pull us all into a web of invisible tracking mechanisms that we are just beginning to comprehend, yet are starting to experience viscerally in the US as Ice wields these systems near our homes, churches, parks and schools. The dragnets powered by Istar technology trap more than migrants and combatants ... in their wake. They appear to violate first and fourth amendment rights.

Note: Read how Palantir helped the NSA and its allies spy on the entire planet. Learn more about emerging warfare technology in our comprehensive Military-Intelligence Corruption Information Center. For more, read our concise summaries of news articles on AI and Big Tech.

As scientists who have worked on the science of solar geoengineering for decades, we have grown increasingly concerned about the emerging efforts to start and fund private companies to build and deploy technologies that could alter the climate of the planet. The basic idea behind solar geoengineering, or what we now prefer to call sunlight reflection methods (SRM), is that humans might reduce climate change by making the Earth a bit more reflective, partially counteracting the warming caused by the accumulation of greenhouse gases. Many people already distrust the idea of engineering the atmosphere—at whichever scale—to address climate change, fearing negative side effects, inequitable impacts on different parts of the world, or the prospect that a world expecting such solutions will feel less pressure to address the root causes of climate change. Notably, Stardust says on its website that it has developed novel particles that can be injected into the atmosphere to reflect away more sunlight, asserting that they’re “chemically inert in the stratosphere, and safe for humans and ecosystems.” But it’s nonsense for the company to claim they can make particles that are inert in the stratosphere. Even diamonds, which are extraordinarily nonreactive, would alter stratospheric chemistry. Any particle may become coated by background sulfuric acid in the stratosphere. That could accelerate the loss of the protective ozone layer.

Note: Modifying the atmosphere to dim the sun involves catastrophic risks. Regenerative farming is far safer and more promising for stabilizing the climate. In our latest Substack, "Geoengineering is a Weapon That's Been Rebranded as Climate Science. There's a Better Way To Heal the Earth," we present credible evidence and current information showing that weather modification technologies are not only real, but that they are being secretly propagated by multiple groups with differing agendas.

Mark Zuckerberg is said to have started work on Koolau Ranch, his sprawling 1,400-acre compound on the Hawaiian island of Kauai, as far back as 2014. It is set to include a shelter, complete with its own energy and food supplies, though the carpenters and electricians working on the site were banned from talking about it. Asked last year if he was creating a doomsday bunker, the Facebook founder gave a flat "no". The underground space spanning some 5,000 square feet is, he explained, "just like a little shelter, it's like a basement". Other tech leaders ... appear to have been busy buying up chunks of land with underground spaces, ripe for conversion into multi-million pound luxury bunkers. Reid Hoffman, the co-founder of LinkedIn, has talked about "apocalypse insurance". So, could they really be preparing for war, the effects of climate change, or some other catastrophic event the rest of us have yet to know about? The advancement of artificial intelligence (AI) has only added to that list of potential existential woes. Ilya Sutskever, chief scientist and a co-founder of OpenAI, is reported to be one of them. Mr Sutskever was becoming increasingly convinced that computer scientists were on the brink of developing artificial general intelligence (AGI). In a meeting, Mr Sutskever suggested to colleagues that they should dig an underground shelter for the company's top scientists before such a powerful technology was released on the world.

Note: Read how some doomsday preppers are rejecting isolating bunkers in favor of community building and mutual aid. For more along these lines, read our concise summaries of news articles on financial inequality.

In July, US group Delta Air Lines revealed that approximately 3 percent of its domestic fare pricing is determined using artificial intelligence (AI) – although it has not elaborated on how this happens. The company said it aims to increase this figure to 20 percent by the end of this year. According to former Federal Trade Commission Chair Lina Khan ... some companies are able to use your personal data to predict what they know as your “pain point” – the maximum amount you’re willing to spend. In January, the US’s Federal Trade Commission (FTC), which regulates fair competition, reported on a surveillance pricing study it carried out in July 2024. It found that companies can collect data directly through account registrations, email sign-ups and online purchases in order to do this. Additionally, web pixels installed by intermediaries track digital signals including your IP address, device type, browser information, language preferences and “granular” website interactions such as mouse movements, scrolling patterns and video viewing behaviour. This is known as “surveillance pricing”. The FTC Surveillance Pricing report lists several ways in which consumers can protect their data. These include using private browsers to do your online shopping, opting out of consumer tracking where possible, clearing the cookies in your history or using virtual private networks (VPNs) to shield your data from being collected.

Note: For more along these lines, read our concise summaries of news articles on Big Tech and the disappearance of privacy.

Larry Ellison, the billionaire cofounder of Oracle ... said AI will usher in a new era of surveillance that he gleefully said will ensure "citizens will be on their best behavior." Ellison made the comments as he spoke to investors earlier this week during an Oracle financial analysts meeting, where he shared his thoughts on the future of AI-powered surveillance tools. Ellison said AI would be used in the future to constantly watch and analyze vast surveillance systems, like security cameras, police body cameras, doorbell cameras, and vehicle dashboard cameras. "We're going to have supervision," Ellison said. "Every police officer is going to be supervised at all times, and if there's a problem, AI will report that problem and report it to the appropriate person. Citizens will be on their best behavior because we are constantly recording and reporting everything that's going on." Ellison also expects AI drones to replace police cars in high-speed chases. "You just have a drone follow the car," Ellison said. "It's very simple in the age of autonomous drones." Ellison's company, Oracle, like almost every company these days, is aggressively pursuing opportunities in the AI industry. It already has several projects in the works, including one in partnership with Elon Musk's SpaceX. Ellison is the world's sixth-richest man with a net worth of $157 billion.

Note: As journalist Kenan Malik put it, "The problem we face is not that machines may one day exercise power over humans. It is rather that we already live in societies in which power is exercised by a few to the detriment of the majority, and that technology provides a means of consolidating that power." Read about the shadowy companies tracking and trading your personal data, which isn't just used to sell products. It's often accessed by governments, law enforcement, and intelligence agencies, often without warrants or oversight. For more along these lines, read our concise summaries of news articles on Big Tech and the disappearance of privacy.

In an exchange this week on the “All-In Podcast,” Alex Karp was on the defensive. The Palantir CEO used the appearance to downplay and deny the notion that his company would engage in rights-violating surveillance work. “We are the single worst technology to use to abuse civil liberties, which is by the way the reason why we could never get the NSA or the FBI to actually buy our product,” Karp said. What he didn’t mention was the fact that a tranche of classified documents revealed by [whistleblower and former NSA contractor] Edward Snowden and The Intercept in 2017 showed how Palantir software helped the National Security Agency and its allies spy on the entire planet. Palantir software was used in conjunction with a signals intelligence tool codenamed XKEYSCORE, one of the most explosive revelations from the NSA whistleblower’s 2013 disclosures. XKEYSCORE provided the NSA and its foreign partners with a means of easily searching through immense troves of data and metadata covertly siphoned across the entire global internet, from emails and Facebook messages to webcam footage and web browsing. A 2008 NSA presentation describes how XKEYSCORE could be used to detect “Someone whose language is out of place for the region they are in,” “Someone who is using encryption,” or “Someone searching the web for suspicious stuff.” In May, the New York Times reported Palantir would play a central role in a White House plan to boost data sharing between federal agencies, “raising questions over whether he might compile a master list of personal information on Americans that could give him untold surveillance power.”

Note: Read about Palantir's revolving door with the US government. As former NSA intelligence official and whistleblower William Binney articulated, "The ultimate goal of the NSA is total population control." For more along these lines, read our concise summaries of news articles on Big Tech and the disappearance of privacy.

Meta whistleblower Sarah Wynn-Williams, the former director of Global Public Policy for Facebook and author of the recently released tell-all book “Careless People,” told U.S. senators ... that Meta actively targeted teens with advertisements based on their emotional state. In response to a question from Sen. Marsha Blackburn (R-TN), Wynn-Williams admitted that Meta (which was then known as Facebook) had targeted 13- to 17-year-olds with ads when they were feeling down or depressed. “It could identify when they were feeling worthless or helpless or like a failure, and [Meta] would take that information and share it with advertisers,” Wynn-Williams told the senators on the subcommittee for crime and terrorism. “Advertisers understand that when people don’t feel good about themselves, it’s often a good time to pitch a product — people are more likely to buy something.” She said the company was letting advertisers know when the teens were depressed so they could be served an ad at the best time. As an example, she suggested that if a teen girl deleted a selfie, advertisers might see that as a good time to sell her a beauty product as she may not be feeling great about her appearance. They also targeted teens with ads for weight loss when young girls had concerns around body confidence. If Meta was willing to target teens based on their emotional states, it stands to reason they’d do the same to adults. One document displayed during the hearing showed an example of just that.

Note: Facebook hid its own internal research for years showing that Instagram worsened body image issues, revealing that 13% of British teenage girls reported more frequent suicidal thoughts after using the app. For more along these lines, read our concise summaries of news articles on Big Tech and mental health.

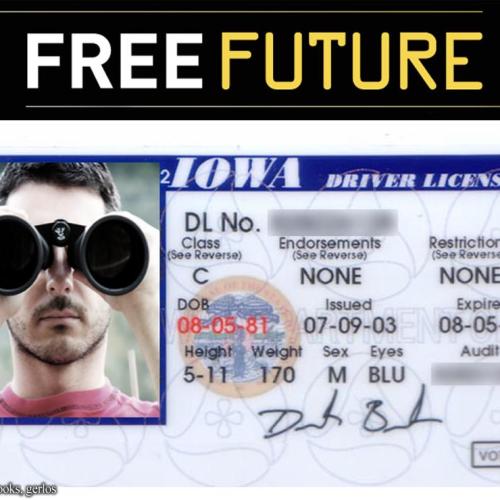

There has been a surge of concern and interest in the threat of “surveillance pricing,” in which companies leverage the enormous amount of detailed data they increasingly hold on their customers to set individualized prices for each of them — likely in ways that benefit the companies and hurt their customers. The central battle in such efforts will be around identity: do the companies whose prices you are checking or negotiating know who you are? Can you stop them from knowing who you are? Unfortunately, one day not too far in the future, you may lose the ability to do so. Many states around the country are creating digital versions of their state driver’s licenses. Digital versions of IDs allow people to be tracked in ways that are not possible or practical with physical IDs — especially since they are being designed to work ... online. It will be much easier for companies to request — and eventually demand — that people share their IDs in order to engage in all manner of transactions. It will make it easier for companies to collect data about us, merge it with other data, and analyze it, all with high confidence that it pertains to the same person — and then recognize us ... and execute their price-maximizing strategy against us. Not only would digital IDs prevent people from escaping surveillance pricing, but surveillance pricing would simultaneously incentivize companies to force the presentation of digital IDs by people who want to shop.

Note: For more along these lines, read our concise summaries of news articles on corporate corruption and the disappearance of privacy.

Loneliness not only affects how we feel in the moment but can leave lasting imprints on our personality, physiology, and even the way our brains process the social world. A large study of older adults [found] that persistent loneliness predicted declines in extraversion, agreeableness, and conscientiousness—traits associated with sociability, kindness, and self-discipline. At the same time, higher levels of neuroticism predicted greater loneliness in the future, suggesting a self-reinforcing cycle. Although social media promises connection, a large-scale study published in Personality and Social Psychology Bulletin suggests that it may actually fuel feelings of loneliness over time. Researchers found that both passive (scrolling) and active (posting and commenting) forms of social media use predicted increases in loneliness. Surprisingly, even active engagement—often believed to foster interaction—was associated with growing disconnection. Even more concerning was the feedback loop uncovered in the data: loneliness also predicted increased social media use over time, suggesting that people may turn to these platforms for relief, only to find themselves feeling even more isolated. Lonely individuals also showed greater activation in areas tied to negative emotions, such as the insula and amygdala. This pattern suggests that lonely people may be more sensitive to social threat or negativity, which could contribute to feeling misunderstood or excluded.

Note: For more along these lines, read our concise summaries of news articles on mental health and Big Tech.

Digital technology was sold as a liberating tool that could free individuals from state power. Yet the state security apparatus always had a different view. The Prism leaks by whistleblower Edward Snowden in 2013 revealed a deep and almost unconditional cooperation between Silicon Valley firms and security apparatuses of the state such as the National Security Agency (NSA). People realized that basically any message exchanged via Big Tech firms including Google, Facebook, Microsoft, Apple, etc. could be easily spied upon with direct backdoor access: a form of mass surveillance with few precedents ... especially in nominally democratic states. The leaks prompted outrage, but eventually most people preferred to look away. The most extreme case is the surveillance and intelligence firm Palantir. Its service is fundamentally to provide a more sophisticated version of the mass surveillance that the Snowden leaks revealed. In particular, it endeavors to support the military and police as they aim to identify and track various targets — sometimes literal human targets. Palantir is a company whose very business is to support the security state in its most brutal manifestations: in military operations that lead to massive loss of life, including of civilians, and in brutal immigration enforcement [in] the United States. Unfortunately, Palantir is but one part of a much broader military-information complex, which is becoming the axis of the new Big Tech Deep State.

Note: For more along these lines, read our concise summaries of news articles on corruption in the intelligence community and in Big Tech.

AI’s promise of behavior prediction and control fuels a vicious cycle of surveillance which inevitably triggers abuses of power. The problem with using data to make predictions is that the process can be used as a weapon against society, threatening democratic values. As the lines between private and public data are blurred in modern society, many won’t realize that their private lives are becoming data points used to make decisions about them. What AI does is make this a surveillance ratchet, a device that only goes in one direction, which goes something like this: To make the inferences I want to make to learn more about you, I must collect more data on you. For my AI tools to run, I need data about a lot of you. And once I’ve collected this data, I can monetize it by selling it to others who want to use AI to make other inferences about you. AI creates a demand for data but also becomes the result of collecting data. What makes AI prediction both powerful and lucrative is being able to control what happens next. If a bank can claim to predict what people will do with a loan, it can use that to decide whether they should get one. If an admissions officer can claim to predict how students will perform in college, they can use that to decide which students to admit. Amazon’s Echo devices have been subject to warrants for the audio recordings made by the device inside our homes—recordings that were made even when the people present weren’t talking directly to the device. The desire to surveil is bipartisan. It’s about power, not party politics.

Note: As journalist Kenan Malik put it, "It is not AI but our blindness to the way human societies are already deploying machine intelligence for political ends that should most worry us." Read about the shadowy companies tracking and trading your personal data, which isn't just used to sell products. It's often accessed by governments, law enforcement, and intelligence agencies, often without warrants or oversight. For more, read our concise summaries of news articles on AI.

Beginning in 2004, the CIA established a vast network of at least 885 websites, ranging from Johnny Carson and Star Wars fan pages to online message boards about Rastafari. Spanning 29 languages and targeting at least 36 countries directly, these websites were aimed not only at adversaries such as China, Venezuela, and Russia, but also at allied nations ... showing that the United States treats its friends much like its foes. These websites served as cover for informants, offering some level of plausible deniability if casually examined. Few of these pages provided any unique content and simply rehosted news and blogs from elsewhere. Informants in enemy nations, such as Venezuela, used sites like Noticias-Caracas and El Correo De Noticias to communicate with Langley, while Russian moles used My Online Game Source and TodaysNewsAndWeather-Ru.com, and other similar platforms. In 2010, USAID—a CIA front organization—secretly created the Cuban social media app, Zunzuneo. While the 885 fake websites were not established to influence public opinion, today, the U.S. government sponsors thousands of journalists worldwide for precisely this purpose. The Trump administration’s decision to pause funding to USAID inadvertently exposed a network of more than 6,200 reporters working at nearly 1,000 news outlets or journalism organizations who were all quietly paid to promote pro-U.S. messaging in their countries. Facebook has hired dozens of former CIA officials to run its most sensitive operations. As the platform’s senior misinformation manager, [Aaron Berman] ultimately has the final say over what content is promoted and what is demoted or deleted from Facebook. Until 2019, Berman was a high-ranking CIA officer, responsible for writing the president’s daily security brief.

Note: Dozens of former CIA agents hold top jobs at Google. Learn more about the CIA’s longstanding propaganda network in our comprehensive Military-Intelligence Corruption Information Center. For more along these lines, read our concise summaries of news articles on intelligence agency corruption and media manipulation.

Last April, in a move generating scant media attention, the Air Force announced that it had chosen two little-known drone manufacturers — Anduril Industries of Costa Mesa, California, and General Atomics of San Diego — to build prototype versions of its proposed Collaborative Combat Aircraft (CCA), a future unmanned plane intended to accompany piloted aircraft on high-risk combat missions. The lack of coverage was surprising, given that the Air Force expects to acquire at least 1,000 CCAs over the coming decade at around $30 million each, making this one of the Pentagon’s costliest new projects. But consider that the least of what the media failed to note. In winning the CCA contract, Anduril and General Atomics beat out three of the country’s largest and most powerful defense contractors — Boeing, Lockheed Martin, and Northrop Grumman — posing a severe threat to the continued dominance of the existing military-industrial complex, or MIC. The very notion of a “military-industrial complex” linking giant defense contractors to powerful figures in Congress and the military was introduced on January 17, 1961, by President Dwight D. Eisenhower in his farewell address. In 2024, just five companies — Lockheed Martin (with $64.7 billion in defense revenues), RTX (formerly Raytheon, with $40.6 billion), Northrop Grumman ($35.2 billion), General Dynamics ($33.7 billion), and Boeing ($32.7 billion) — claimed the vast bulk of Pentagon contracts.

Note: For more along these lines, read our concise summaries of news articles on Big Tech and military corruption.

A series of corporate leaks over the past few years provides a remarkable window into the hidden engines powering social media. In January 2021, a few Facebook employees posted an article on the company’s engineering blog purporting to explain the news feed algorithm that determines which of the countless posts available each user will see and the order in which they will see them. Eight months later ... a Facebook product manager turned whistleblower snuck over ten thousand pages of documents and internal messages out of Facebook headquarters. She leaked these to a handful of media outlets. Internal studies documented Instagram’s harmful impact on the mental health of vulnerable teen girls. A secret whitelist program exempted VIP users from the moderation system the rest of us face. It turns out Facebook engineers have assigned a point value to each type of engagement users can perform on a post (liking, commenting, resharing, etc.). For each post you could be shown, these point values are multiplied by the probability that the algorithm thinks you’ll perform that form of engagement. These multiplied pairs of numbers are added up, and the total is the post’s personalized score for you. Facebook, TikTok, and Twitter all run on essentially the same simple math formula. Once we start clicking the social media equivalent of junk food, we’re going to be served up a lot more of it, which makes it harder to resist. It’s a vicious cycle.
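
The scoring rule described in this excerpt (point value times predicted probability, summed over engagement types) can be sketched in a few lines of Python. This is only an illustration of the arithmetic: the point values, the probabilities, and the names `ENGAGEMENT_POINTS`, `personalized_score`, and `rank_posts` are hypothetical and are not taken from any leaked document or real platform code.

```python
from typing import Dict, List

# Hypothetical point values per engagement type (illustrative only,
# not the weights any platform actually uses).
ENGAGEMENT_POINTS: Dict[str, float] = {
    "like": 1.0,
    "comment": 15.0,
    "reshare": 30.0,
}


def personalized_score(predicted_probs: Dict[str, float]) -> float:
    """Add up (point value x predicted probability) for each engagement type.

    Engagement types missing from predicted_probs count as probability 0.
    """
    return sum(
        points * predicted_probs.get(kind, 0.0)
        for kind, points in ENGAGEMENT_POINTS.items()
    )


def rank_posts(candidates: Dict[str, Dict[str, float]]) -> List[str]:
    """Return post ids ordered from highest to lowest personalized score."""
    return sorted(
        candidates,
        key=lambda post_id: personalized_score(candidates[post_id]),
        reverse=True,
    )


if __name__ == "__main__":
    # Made-up predictions for one user and two candidate posts.
    feed = {
        "vacation_photo": {"like": 0.5, "comment": 0.1},
        "outrage_post": {"like": 0.1, "reshare": 0.1},
    }
    for post_id in rank_posts(feed):
        print(post_id, round(personalized_score(feed[post_id]), 2))
```

Under these made-up numbers, a post that is only slightly likely to be reshared can outrank one that is quite likely to be liked, which is one way the "junk food" feedback loop in the excerpt could arise.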

Note: Read our latest Substack focused on a social media platform that is harnessing technology as a listening tool for the radical purpose of bringing people together across differences. For more along these lines, read our concise summaries of news articles on Big Tech and media manipulation.

Reviewing individuals’ social media to conduct ideological vetting has been a defining initiative of President Trump’s second term. As part of that effort, the administration has proposed expanding the mandatory collection of social media identifiers. By linking individuals’ online presence to government databases, officials could more easily identify, monitor, and penalize people based on their online self-expression, raising the risk of self-censorship. Most recently, the State Department issued a cable directing consular officers to review the social media of all student visa applicants for “any indications of hostility towards the citizens, culture, government, institutions or founding principles of the United States,” as well as for any “history of political activism.” This builds on earlier efforts this term, including the State Department’s “Catch and Revoke” program, which promised to leverage artificial intelligence to screen visa holders’ social media for ostensible “pro-Hamas” activity, and U.S. Citizenship and Immigration Services’ April announcement that it would begin looking for “antisemitic activity” in the social media of scores of foreign nationals. At the border, any traveler, regardless of citizenship status, may face additional scrutiny. U.S. border agents are authorized to ... examine phones, computers, and other devices to review posts and private messages on social media, even if they do not suspect any involvement in criminal activity or have immigration-related concerns.

Note: Our news archives on censorship and the disappearance of privacy reveal how government surveillance of social media has long been conducted by all presidential administrations and all levels of government.

Data brokers are required by California law to provide ways for consumers to request their data be deleted. But good luck finding them. More than 30 of the companies, which collect and sell consumers’ personal information, hid their deletion instructions from Google. This creates one more obstacle for consumers who want to delete their data. Data brokers nationwide must register in California under the state’s Consumer Privacy Act, which allows Californians to request that their information be removed, that it not be sold, or that they get access to it. After reviewing the websites of all 499 data brokers registered with the state, we found 35 had code to stop certain pages from showing up in searches. While those companies might be fulfilling the letter of the law by providing a page consumers can use to delete their data, it means little if those consumers can’t find the page, according to Matthew Schwartz, a policy analyst. “This sounds to me like a clever work-around to make it as hard as possible for consumers to find it,” Schwartz said. Some companies that hid their privacy instructions from search engines included a small link at the bottom of their homepage. Accessing it often required scrolling multiple screens, dismissing pop-ups for cookie permissions and newsletter sign-ups, then finding a link that was a fraction the size of other text on the page. So consumers still faced a serious hurdle when trying to get their information deleted.
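
The excerpt does not say exactly what code the 35 companies used. One common way to keep a page out of search results is a robots `<meta>` tag carrying a `noindex` directive, and the sketch below assumes that mechanism. It uses only Python's standard-library `html.parser`; the names `RobotsMetaParser` and `is_hidden_from_search` are hypothetical, and the check ignores other mechanisms such as HTTP headers or robots.txt.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> / <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute values can be None (e.g. <meta name>), so normalize to "".
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in {"robots", "googlebot"}:
            for directive in attr.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def is_hidden_from_search(html: str) -> bool:
    """True if the page asks search engines not to index it via a robots meta tag.

    Assumption: only the meta-tag mechanism is checked here.
    """
    parser = RobotsMetaParser()
    parser.feed(html)
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in parser.directives or "none" in parser.directives


if __name__ == "__main__":
    page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
    print(is_hidden_from_search(page))
```

A consumer or auditor could run a check like this against the saved HTML of a broker's deletion page to see whether it is asking search engines to leave it out of results.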

Note: For more along these lines, read our concise summaries of news articles on Big Tech and the disappearance of privacy.

Important Note: Explore our full index to revealing excerpts of key major media news stories on several dozen engaging topics. And don't miss amazing excerpts from 20 of the most revealing news articles ever published.