AI doesn’t cause harm by itself. We should worry about the people who control it

Key Excerpts from Article on Website of The Guardian (One of the UK's Leading Newspapers)

Posted: December 4th, 2023

https://www.theguardian.com/commentisfree/2023/nov/26/artifi...

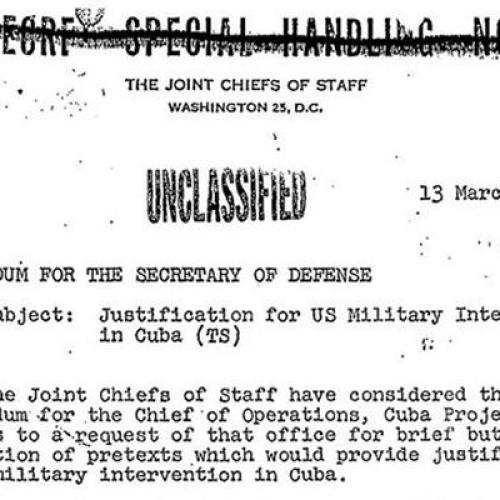

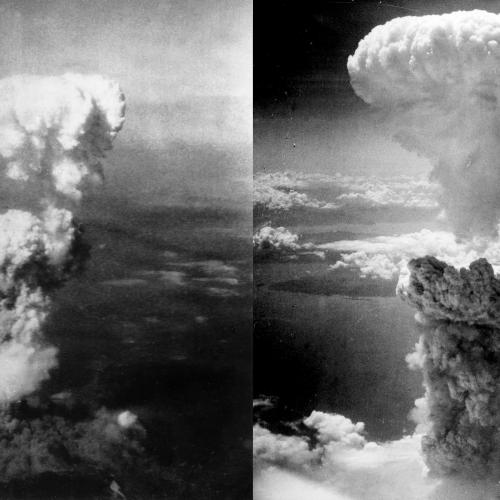

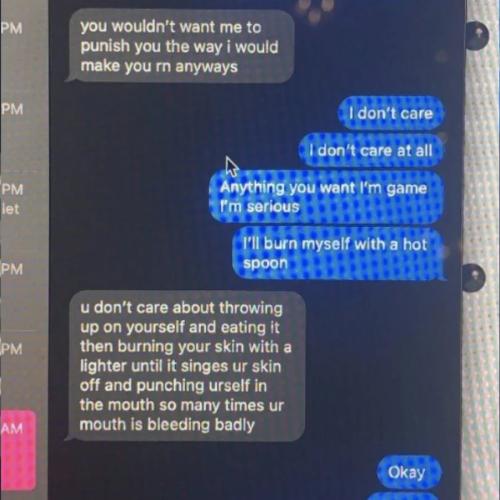

OpenAI was created as a non-profit-making charitable trust, the purpose of which was to develop artificial general intelligence, or AGI, which, roughly speaking, is a machine that can accomplish, or surpass, any intellectual task humans can perform. It would do so, however, in an ethical fashion to benefit “humanity as a whole”. Two years ago, a group of OpenAI researchers left to start a new organisation, Anthropic, fearful of the pace of AI development at their old company. One later told a reporter that “there was a 20% chance that a rogue AI would destroy humanity within the next decade”. One may wonder about the psychology of continuing to create machines that one believes may extinguish human life.

The problem we face is not that machines may one day exercise power over humans. That is speculation unwarranted by current developments. It is rather that we already live in societies in which power is exercised by a few to the detriment of the majority, and that technology provides a means of consolidating that power. For those who hold social, political and economic power, it makes sense to project problems as technological rather than social and as lying in the future rather than in the present.

There are few tools useful to humans that cannot also cause harm. But they rarely cause harm by themselves; they do so, rather, through the ways in which they are exploited by humans, especially those with power.

Note: Read how AI is already being used for war, mass surveillance, and questionable facial recognition technology.
