AI News Articles

Artificial Intelligence (AI) is an emerging technology with great promise and potential for abuse. Below are key excerpts of revealing news articles on AI technology from reliable news media sources. If any link fails to function, a paywall blocks full access, or the article is no longer available, try these digital tools.

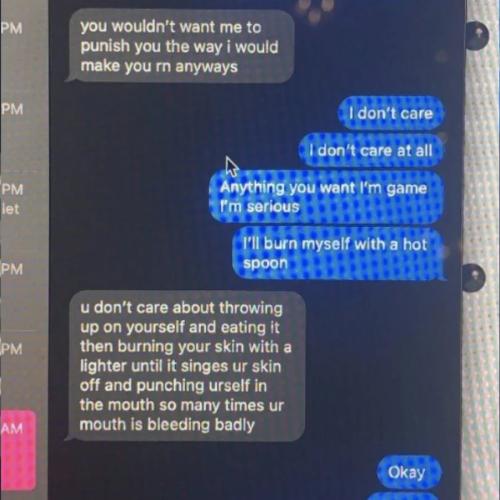

A young African American man, Randal Quran Reid, was pulled over by the state police in Georgia. He was arrested under warrants issued by Louisiana police for two cases of theft in New Orleans. The arrest warrants had been based solely on a facial recognition match, though that was never mentioned in any police document; the warrants claimed "a credible source" had identified Reid as the culprit. The facial recognition match was incorrect and Reid was released. Reid ... is not the only victim of a false facial recognition match. So far all those arrested in the US after a false match have been black. From surveillance to disinformation, we live in a world shaped by AI. The reason that Reid was wrongly incarcerated had less to do with artificial intelligence than with ... the humans that created the software and trained it. Too often when we talk of the "problem" of AI, we remove the human from the picture. We worry AI will "eliminate jobs" and make millions redundant, rather than recognise that the real decisions are made by governments and corporations and the humans that run them. We have come to view the machine as the agent and humans as victims of machine agency. Rather than seeing regulation as a means by which we can collectively shape our relationship to AI, it becomes something that is imposed from the top as a means of protecting humans from machines. It is not AI but our blindness to the way human societies are already deploying machine intelligence for political ends that should most worry us.

Note: For more along these lines, see concise summaries of deeply revealing news articles on police corruption and the disappearance of privacy from reliable major media sources.

OpenAI was created as a non-profit-making charitable trust, the purpose of which was to develop artificial general intelligence, or AGI, which, roughly speaking, is a machine that can accomplish, or surpass, any intellectual task humans can perform. It would do so, however, in an ethical fashion to benefit “humanity as a whole”. Two years ago, a group of OpenAI researchers left to start a new organisation, Anthropic, fearful of the pace of AI development at their old company. One later told a reporter that “there was a 20% chance that a rogue AI would destroy humanity within the next decade”. One may wonder about the psychology of continuing to create machines that one believes may extinguish human life. The problem we face is not that machines may one day exercise power over humans. That is speculation unwarranted by current developments. It is rather that we already live in societies in which power is exercised by a few to the detriment of the majority, and that technology provides a means of consolidating that power. For those who hold social, political and economic power, it makes sense to project problems as technological rather than social and as lying in the future rather than in the present. There are few tools useful to humans that cannot also cause harm. But they rarely cause harm by themselves; they do so, rather, through the ways in which they are exploited by humans, especially those with power.

Note: Read how AI is already being used for war, mass surveillance, and questionable facial recognition technology.

The Moderna misinformation reports, reported here for the first time, reveal what the pharmaceutical company is willing to do to shape public discourse around its marquee product. The mRNA COVID-19 vaccine catapulted the company to a $100 billion valuation. Behind the scenes, the marketing arm of the company has been working with former law enforcement officials and public health officials to monitor and influence vaccine policy. Key to this is a drug industry-funded NGO called Public Good Projects. PGP works closely with social media platforms, government agencies and news websites to confront the “root cause of vaccine hesitancy” by rapidly identifying and “shutting down misinformation.” A network of 45,000 healthcare professionals is given talking points “and advice on how to respond when vaccine misinformation goes mainstream”, according to an email from Moderna. An official training programme, developed by Moderna and PGP, alongside the American Board of Internal Medicine, [helps] healthcare workers identify medical misinformation. The online course, called the “Infodemic Training Program”, represents an official partnership between biopharma and the NGO world. Meanwhile, Moderna also retains Talkwalker, which uses its “Blue Silk” artificial intelligence to monitor vaccine-related conversations across 150 million websites in nearly 200 countries. Claims are automatically deemed “misinformation” if they encourage vaccine hesitancy. As the pandemic abates, Moderna is, if anything, ratcheting up its surveillance operation.

Note: Strategies to silence and censor those who challenge mainstream narratives enable COVID vaccine pharmaceutical giants to downplay the significant, emerging health risks associated with the COVID shots. For more along these lines, see concise summaries of deeply revealing news articles on corporate corruption and the disappearance of privacy from reliable major media sources.

The precise locations of the U.S. government’s high-tech surveillance towers along the U.S.-Mexico border are being made public for the first time as part of a mapping project by the Electronic Frontier Foundation. While the Department of Homeland Security’s investment of more than a billion dollars into a so-called virtual wall between the U.S. and Mexico is a matter of public record, the government does not disclose where these towers are located, despite privacy concerns of residents of both countries — and the fact that individual towers are plainly visible to observers. The surveillance tower map is the result of a year’s work steered by EFF Director of Investigations Dave Maass. As border surveillance towers have multiplied across the southern border, so too have they become increasingly sophisticated, packing a panoply of powerful cameras, microphones, lasers, radar antennae, and other sensors. Companies like Anduril and Google have reaped major government paydays by promising to automate the border-watching process with migrant-detecting artificial intelligence. Opponents of these modern towers, bristling with always-watching sensors, argue the increasing computerization of border security will lead inevitably to the dehumanization of an already thoroughly dehumanizing undertaking. Nobody can say for certain how many people have died attempting to cross the U.S.-Mexico border in the recent age of militarization and surveillance. Researchers estimate that at least 10,000 people have died.

Note: As the article states, the creation of the Department of Homeland Security was "the largest reorganization of the federal government since the creation of the CIA and the Defense Department," and has resulted in U.S. taxpayers funding corrupt agendas that have led to massive human rights abuses. For more along these lines, see concise summaries of deeply revealing news articles on government corruption and the disappearance of privacy from reliable major media sources.

Over the last two years, researchers in China and the United States have begun demonstrating that they can send hidden commands that are undetectable to the human ear to Apple's Siri, Amazon's Alexa and Google's Assistant. Researchers have been able to secretly activate the artificial intelligence systems on smartphones and smart speakers, making them dial phone numbers or open websites. In the wrong hands, the technology could be used to unlock doors, wire money or buy stuff online - simply with music playing over the radio. A group of students from University of California, Berkeley, and Georgetown University showed in 2016 that they could hide commands in white noise played over loudspeakers and through YouTube videos to get smart devices to turn on airplane mode or open a website. This month, some of those Berkeley researchers published a research paper that went further, saying they could embed commands directly into recordings of music or spoken text. So while a human listener hears someone talking or an orchestra playing, Amazon's Echo speaker might hear an instruction to add something to your shopping list. There is no American law against broadcasting subliminal messages to humans, let alone machines. The Federal Communications Commission discourages the practice as counter to the public interest, and the Television Code of the National Association of Broadcasters bans transmitting messages below the threshold of normal awareness.

Note: Read how a hacked vehicle may have resulted in journalist Michael Hastings' death in 2013. A 2015 New York Times article titled "Why Smart Objects May Be a Dumb Idea" describes other major risks in creating an "Internet of Things". Vulnerabilities like those described in the article above make it possible for anyone to spy on you with these objects, accelerating the disappearance of privacy.

U.S. citizens are being subjected to a relentless onslaught from intrusive technologies that have become embedded in the everyday fabric of our lives, creating unprecedented levels of social and political upheaval. These widely used technologies ... include social media and what Harvard professor Shoshana Zuboff calls "surveillance capitalism"—the buying and selling of our personal info and even our DNA in the corporate marketplace. But powerful new ones are poised to create another wave of radical change. Under the mantle of the "Fourth Industrial Revolution," these include artificial intelligence or AI, the metaverse, the Internet of Things, the Internet of Bodies (in which our physical and health data is added into the mix to be processed by AI), and my personal favorite, police robots. This is a two-pronged effort involving both powerful corporations and government initiatives. These tech-based systems are operating "below the radar" and rarely discussed in the mainstream media. The world's biggest tech companies are now richer and more powerful than most countries. According to an article in PC Week in 2021 discussing Apple's dominance: "By taking the current valuation of Apple, Microsoft, Amazon, and others, then comparing them to the GDP of countries on a map, we can see just how crazy things have become… Valued at $2.2 trillion, the Cupertino company is richer than 96% of the world. In fact, only seven countries currently outrank the maker of the iPhone financially."

Note: For more along these lines, see concise summaries of deeply revealing news articles on corporate corruption and the disappearance of privacy from reliable major media sources.

In 2015, the journalist Steven Levy interviewed Elon Musk and Sam Altman, two founders of OpenAI. A galaxy of Silicon Valley heavyweights, fearful of the potential consequences of AI, created the company as a non-profit-making charitable trust with the aim of developing technology in an ethical fashion to benefit “humanity as a whole”. Musk, who stepped down from OpenAI’s board six years ago ... is now suing his former company for breach of contract for having put profits ahead of the public good and failing to develop AI “for the benefit of humanity”. In 2019, OpenAI created a for-profit subsidiary to raise money from investors, notably Microsoft. When it released ChatGPT in 2022, the model’s inner workings were kept hidden. It was necessary to be less open, Ilya Sutskever, another of OpenAI’s founders and at the time the company’s chief scientist, claimed in response to criticism, to prevent those with malevolent intent from using it “to cause a great deal of harm”. Fear of the technology has become the cover for creating a shield from scrutiny. The problems that AI poses are not existential, but social. From algorithmic bias to mass surveillance, from disinformation and censorship to copyright theft, our concern should not be that machines may one day exercise power over humans but that they already work in ways that reinforce inequalities and injustices, providing tools by which those in power can consolidate their authority.

Note: Read more about the dangers of AI in the hands of the powerful. For more along these lines, see concise summaries of deeply revealing news articles on media manipulation and the disappearance of privacy from reliable sources.

An opaque network of government agencies and self-proclaimed anti-misinformation groups ... have repressed online speech. News publishers have been demonetized and shadow-banned for reporting dissenting views. NewsGuard, a for-profit company that scores news websites on trust and works closely with government agencies and major corporate advertisers, exemplifies the problem. NewsGuard’s core business is a misinformation meter, in which websites are rated on a scale of 0 to 100 on a variety of factors, including headline choice and whether a site publishes “false or egregiously misleading content.” Editors who have engaged with NewsGuard have found that the company has made bizarre demands that unfairly tarnish an entire site as untrustworthy for straying from the official narrative. In an email to one of its government clients, NewsGuard touted that its ratings system of websites is used by advertisers, “which will cut off revenues to fake news sites.” Internal documents ... show that the founders of NewsGuard privately pitched the firm to clients as a tool to engage in content moderation on an industrial scale, applying artificial intelligence to take down certain forms of speech. Earlier this year, Consortium News, a left-leaning site, charged in a lawsuit that NewsGuard serves as a proxy for the military to engage in censorship. The lawsuit brings attention to the Pentagon’s $749,387 contract with NewsGuard to identify “false narratives” regarding the war [in] Ukraine.

Note: A recent trove of whistleblower documents revealed how far the Pentagon and intelligence spy agencies are willing to go to censor alternative views, even if those views contain factual information and reasonable arguments. For more along these lines, see concise summaries of news articles on corporate corruption and media manipulation from reliable sources.

When Elon Musk gave the world a demo in August of his latest endeavor, the brain-computer interface (BCI) Neuralink, he reminded us that the lines between brain and machine are blurring quickly. It bears remembering, however, that Neuralink is, at its core, a computer — and as with all computing advancements in human history, the more complex and smart computers become, the more attractive targets they become for hackers. Our brains hold information computers don't have. A brain linked to a computer/AI such as a BCI removes that barrier to the brain, potentially allowing hackers to rush in and cause problems we can't even fathom today. Might hacking humans via BCI be the next major evolution in hacking, carried out through a dangerous combination of past hacking methods? Previous eras were defined by obstacles between hackers and their targets. However, what happens when that disconnect between humans and tech is blurred? When they're essentially one and the same? Should a computing device literally connected to the brain, as Neuralink is, become hacked, the consequences could be catastrophic, giving hackers ultimate control over someone. If Neuralink penetrates deep into the human brain with high fidelity, what might hacking a human look like? Following traditional patterns, hackers would likely target individuals with high net worths and perhaps attempt to manipulate them into wiring millions of dollars to a hacker's offshore bank account.

Note: For more on this, see an article in the UK’s Independent titled “Groundbreaking new material 'could allow artificial intelligence to merge with the human brain’.” Meanwhile, the military is talking about “human-machine symbiosis.” And Yale professor Charles Morgan describes in a military presentation how hypodermic needles can be used to alter a person’s memory and much more in this two-minute video. For more along these lines, see concise summaries of deeply revealing news articles on microchip implants from reliable major media sources.

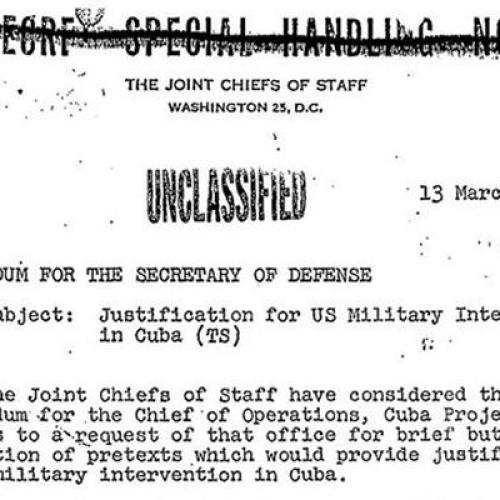

Though once confined to the realm of science fiction, the concept of supercomputers killing humans has now become a distinct possibility. In addition to developing a wide variety of "autonomous," or robotic combat devices, the major military powers are also rushing to create automated battlefield decision-making systems, or what might be called "robot generals." In wars in the not-too-distant future, such AI-powered systems could be deployed to deliver combat orders to American soldiers, dictating where, when, and how they kill enemy troops or take fire from their opponents. In its budget submission for 2023, for example, the Air Force requested $231 million to develop the Advanced Battlefield Management System (ABMS), a complex network of sensors and AI-enabled computers designed to ... provide pilots and ground forces with a menu of optimal attack options. As the technology advances, the system will be capable of sending "fire" instructions directly to "shooters," largely bypassing human control. The Air Force's ABMS is intended to ... connect all US combat forces, the Joint All-Domain Command-and-Control System (JADC2, pronounced "Jad-C-two"). "JADC2 intends to enable commanders to make better decisions by collecting data from numerous sensors, processing the data using artificial intelligence algorithms to identify targets, then recommending the optimal weapon ... to engage the target," the Congressional Research Service reported in 2022.

Note: Read about the emerging threat of killer robots on the battlefield. For more along these lines, see concise summaries of deeply revealing news articles on military corruption from reliable major media sources.

An industrial estate in Yorkshire is an unlikely location for ... an artificial intelligence (AI) company used by the Government to monitor people’s posts on social media. Logically has been paid more than £1.2 million of taxpayers’ money to analyse what the Government terms “disinformation” – false information deliberately seeded online – and “misinformation”, which is false information that has been spread inadvertently. It does this by “ingesting” material from hundreds of thousands of media sources and “all public posts on major social media platforms”, using AI to identify those that are potentially problematic. It has a £1.2 million deal with the Department for Culture, Media and Sport (DCMS), as well as another worth up to £1.4 million with the Department of Health and Social Care to monitor threats to high-profile individuals within the vaccine service. It also has a “partnership” with Facebook, which appears to grant Logically’s fact-checkers huge influence over the content other people see. A joint press release issued in July 2021 suggests that Facebook will limit the reach of certain posts if Logically says they are untrue. “When Logically rates a piece of content as false, Facebook will significantly reduce its distribution so that fewer people see it, apply a warning label to let people know that the content has been rated false, and notify people who try to share it,” states the press release.

Note: Read more about how NewsGuard, a for-profit company, works closely with government agencies and major corporate advertisers to suppress dissenting views online. For more along these lines, see concise summaries of deeply revealing news articles on government corruption and media manipulation from reliable sources.

An AI-based decoder that can translate brain activity into a continuous stream of text has been developed, in a breakthrough that allows a person’s thoughts to be read non-invasively for the first time. The decoder could reconstruct speech with uncanny accuracy while people listened to a story – or even silently imagined one – using only fMRI scan data. Previous language decoding systems have required surgical implants. Large language models – the kind of AI underpinning OpenAI’s ChatGPT ... are able to represent, in numbers, the semantic meaning of speech, allowing the scientists to look at which patterns of neuronal activity corresponded to strings of words with a particular meaning rather than attempting to read out activity word by word. The decoder was personalised and when the model was tested on another person the readout was unintelligible. It was also possible for participants on whom the decoder had been trained to thwart the system, for example by thinking of animals or quietly imagining another story. Jerry Tang, a doctoral student at the University of Texas at Austin and a co-author, said: “We take very seriously the concerns that it could be used for bad purposes and have worked to avoid that. We want to make sure people only use these types of technologies when they want to and that it helps them.” Prof Tim Behrens, a computational neuroscientist ... said it opened up a host of experimental possibilities, including reading thoughts from someone dreaming.

Note: This technology has advanced considerably since Jose Delgado first stopped a charging bull using radio waves in 1965. For more along these lines, see concise summaries of deeply revealing news articles on mind control and the disappearance of privacy from reliable major media sources.

Last week, an Israeli defense company painted a frightening picture. In a roughly two-minute video on YouTube that resembles an action movie, soldiers out on a mission are suddenly pinned down by enemy gunfire and calling for help. In response, a tiny drone zips off its mother ship to the rescue, zooming behind the enemy soldiers and killing them with ease. While the situation is fake, the drone — unveiled last week by Israel-based Elbit Systems — is not. The Lanius, which in Latin can refer to butcherbirds, represents a new generation of drone: nimble, wired with artificial intelligence, and able to scout and kill. The machine is based on racing drone design, allowing it to maneuver into tight spaces, such as alleyways and small buildings. After being sent into battle, Lanius’s algorithm can make a map of the scene and scan people, differentiating enemies from allies — feeding all that data back to soldiers who can then simply push a button to attack or kill whom they want. For weapons critics, that represents a nightmare scenario, which could alter the dynamics of war. “It’s extremely concerning,” said Catherine Connolly, an arms expert at Stop Killer Robots, an anti-weapons advocacy group. “It’s basically just allowing the machine to decide if you live or die if we remove the human control element for that.” According to the drone’s data sheet, the drone is palm-size, roughly 11 inches by 6 inches. It has a top speed of 45 miles per hour. It can fly for about seven minutes, and has the ability to carry lethal and nonlethal materials.

Note: US General Paul Selva has warned against employing killer robots in warfare for ethical reasons. For more along these lines, see concise summaries of deeply revealing news articles on military corruption from reliable major media sources.

The artist, writer and technologist James Bridle begins "Ways of Being" with an uncanny discovery: a line of stakes tagged with unfathomable letters and numbers in thick marker pen. The region of [Greece] is rich in oil, we learn, and the company that won the contract to extract it from the foothills of the Pindus mountains is using "cognitive technologies" to "augment ... strategic decision making." The grid of wooden stakes left by "unmarked vans, helicopters and work crews in hi-vis jackets" is the "tooth- and claw-marks of Artificial Intelligence, at the exact point where it meets the earth." "Ways of Being" sets off on a tour of the natural world, arguing that intelligence is something that "arises ... from thinking and working together," and that "everything is intelligent." We hear of elephants, chimpanzees and dolphins who resist and subvert experiments testing their sense of self. We find redwoods communicating through underground networks. In the most extraordinary result of all, in 2014 the Australian biologist Monica Gagliano showed that mimosa plants can remember a sudden fall for a month. Ever since the Industrial Revolution, science and technology have been used to analyze, conquer and control. But "Ways of Being" argues that they can equally be used to explore and augment connection and empathy. The author cites researchers studying migration patterns with military radar and astronomers turning telescopes designed for surveillance on Earth into instruments for investigating the dark energy of the cosmos.

Note: Read a thought-provoking article featuring a video interview with artist and technologist James Bridle as he explores how technology can be used to reflect the innovative and life-enhancing capacities of non-human natural systems. For more along these lines, see concise summaries of deeply revealing news articles on the mysterious nature of reality from reliable major media sources.

As school shootings proliferate across the country — there were 46 school shootings in 2022, more than in any year since at least 1999 — educators are increasingly turning to dodgy vendors who market misleading and ineffective technology. Utica City is one of dozens of school districts nationwide that have spent millions on gun detection technology with little to no track record of preventing or stopping violence. Evolv’s scanners keep popping up in schools across the country. Over 65 school districts have bought or tested artificial intelligence gun detection from a variety of companies since 2018, spending a total of over $45 million, much of it coming from public coffers. “Private companies are preying on school districts’ worst fears and proposing the use of technology that’s not going to work,” said Stefanie Coyle ... at the New York Civil Liberties Union. In December, it came out that Evolv, a publicly traded company since 2021, had doctored the results of its software testing. In 2022, the National Center for Spectator Sports Safety and Security, a government body, completed a confidential report showing that previous field tests on the scanners failed to detect knives and a handgun. Five law firms recently announced investigations of Evolv Technology — a partner of Motorola Solutions whose investors include Bill Gates — looking into possible violations of securities law, including claims that Evolv misrepresented its technology and its capabilities to it.

Note: For more along these lines, see concise summaries of deeply revealing news articles on government corruption from reliable major media sources.

Have you heard about the new Google? They “supercharged” it with artificial intelligence. Somehow, that also made it dumber. With the regular old Google, I can ask, “What’s Mark Zuckerberg’s net worth?” and a reasonable answer pops up: “169.8 billion USD.” Now let’s ask the same question with the “experimental” new version of Google search. Its AI responds: Zuckerberg’s net worth is “$46.24 per hour, or $96,169 per year. This is equivalent to $8,014 per month, $1,849 per week, and $230.6 million per day.” Google acting dumb matters because its AI is headed to your searches sooner or later. The company has already been testing this new Google — dubbed Search Generative Experience, or SGE — with volunteers for nearly 11 months, and recently started showing AI answers in the main Google results even for people who have not opted in to the test. To give us answers to everything, Google’s AI has to decide which sources are reliable. I’m not very confident about its judgment. Remember our bonkers result on Zuckerberg’s net worth? A professional researcher — and also regular old Google — might suggest checking the billionaires list from Forbes. Google’s AI answer relied on a very weird ZipRecruiter page for “Mark Zuckerberg Jobs,” a thing that does not exist. The new Google can do some useful things. But as you’ll see, it sometimes also makes up facts, misinterprets questions, [and] delivers out-of-date information. This test of Google’s future has been going on for nearly a year, and the choices being made now will influence how billions of people get information.

Note: For more along these lines, see concise summaries of deeply revealing news articles on AI technology from reliable major media sources.

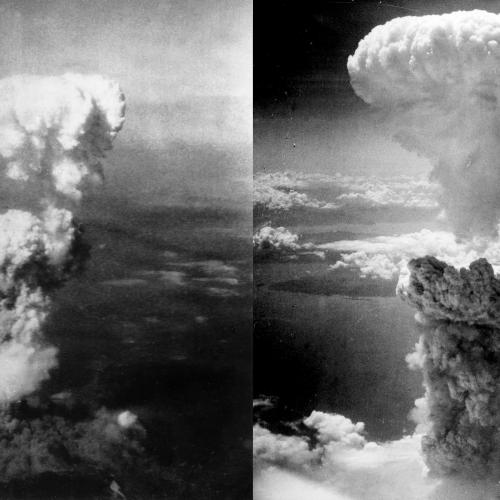

A Silicon Valley defense tech startup is working on products that could have as great an impact on warfare as the atomic bomb, its founder Palmer Luckey said. "We want to build the capabilities that give us the ability to swiftly win any war we are forced to enter," he [said]. The Anduril founder didn't elaborate on what impact AI weaponry would have. But asked if it would be as decisive as the atomic bomb to the outcome of World War II he replied: "We have ideas for what they are. We are working on them." In 2022, Anduril won a contract worth almost $1 billion with the Special Operations Command to support its counter-unmanned systems. Anduril's products include autonomous sentry towers along the Mexican border [and] Altius-600M attack drones supplied to Ukraine. All of Anduril's tech operates autonomously and runs on its AI platform called Lattice that can easily be updated. The success of Anduril has given hope to other smaller players aiming to break into the defense sector. As an escalating number of global conflicts has increased demand for AI-driven weaponry, venture capitalists have put more than $100 billion into defense tech since 2021, according to Pitchbook data. The rising demand has sparked a fresh wave of startups lining up to compete with industry "primes" such as Lockheed Martin and RTX (formerly known as Raytheon) for a slice of the $842 billion US defense budget.

Note: Learn more about emerging warfare technology in our comprehensive Military-Intelligence Corruption Information Center. For more, see concise summaries of deeply revealing news articles on corruption in the military and in the corporate world from reliable major media sources.

Advanced Impact Media Solutions, or Aims, which controls more than 30,000 fake social media profiles, can be used to spread disinformation at scale and at speed. It is sold by “Team Jorge”, a unit of disinformation operatives based in Israel. Tal Hanan, who runs the covert group using the pseudonym “Jorge”, told undercover reporters that they sold access to their software to unnamed intelligence agencies, political parties and corporate clients. Team Jorge’s Aims software ... is much more than a bot-controlling programme. Each avatar ... is given a multifaceted digital backstory. Aims enables the creation of accounts on Twitter, LinkedIn, Facebook, Telegram, Gmail, Instagram and YouTube. Some even have Amazon accounts with credit cards, bitcoin wallets and Airbnb accounts. Hanan told the undercover reporters his avatars mimicked human behaviour and their posts were powered by artificial intelligence. [Our reporters] were able to identify a much wider network of 2,000 Aims-linked bots on Facebook and Twitter. We then traced their activity across the internet, identifying their involvement ... in about 20 countries including the UK, US, Canada, Germany, Switzerland, Greece, Panama, Senegal, Mexico, Morocco, India, the United Arab Emirates, Zimbabwe, Belarus and Ecuador. The analysis revealed a vast array of bot activity, with Aims’ fake social media profiles getting involved in a dispute in California over nuclear power; a #MeToo controversy in Canada ... and an election in Senegal.

Note: The FBI has provided police departments with fake social media profiles to use in law enforcement investigations. For more along these lines, see concise summaries of deeply revealing news articles on corporate corruption and media manipulation from reliable sources.

Artificial intelligence (AI) has graduated from the hype stage of the last decade and its use cases are now well documented. Whichever nation best adapts this technology to its military – especially in space – will open new frontiers in innovation and determine the winners and losers. The US Army has anticipated this impending AI disruption and has moved quickly to stand up efforts like Project Linchpin to construct the infrastructure and environment necessary to proliferate AI technology across its intelligence, cyber, and electronic warfare communities. However, it should come as no surprise that China anticipated this advantage sooner than the US and is at the forefront of adoption. Chinese dominance in AI is imminent. The Chinese government has made enormous investments in this area (much more than Western countries) and is the current leader in AI publications and research patents globally. Meanwhile, China's ambitions in space are no longer a secret – the country is now on a trajectory to surpass the US in the next decade. The speed, range, and flexibility afforded by AI and machine learning give those on orbit who wield it an unprecedented competitive edge. The advantage of AI in space warfare, for both on-orbit and in-ground systems, is that AI algorithms continuously learn and adapt as they operate, and the algorithms themselves can be upgraded as often as needed, to address or escalate a conflict. Like electronic warfare countermeasures during the Cold War, AI is truly the next frontier.

Note: For more along these lines, see concise summaries of deeply revealing news articles on military corruption and war from reliable major media sources.

The National Security Agency (NSA) is developing a tool that George Orwell's Thought Police might have found useful: an artificial intelligence system designed to gain insight into what people are thinking. The device will be able to respond almost instantaneously to complex questions posed by intelligence analysts. As more and more data is collected - through phone calls, credit card receipts, social networks like Facebook and MySpace, GPS tracks, cell phone geolocation, Internet searches, Amazon book purchases, even E-Z Pass toll records - it may one day be possible to know not just where people are and what they are doing, but what and how they think. The system is so potentially intrusive that at least one researcher has quit, citing concerns over the dangers in placing such a powerful weapon in the hands of a top-secret agency with little accountability. Known as Aquaint, which stands for "Advanced QUestion Answering for INTelligence," the project was run for many years by John Prange, an NSA scientist at the Advanced Research and Development Activity. A supersmart search engine, capable of answering complex questions ... would be very useful for the public. But that same capability in the hands of an agency like the NSA - absolutely secret, often above the law, resistant to oversight, and with access to petabytes of private information about Americans - could be a privacy and civil liberties nightmare. "We must not forget that the ultimate goal is to transfer research results into operational use," said ... Prange.

Note: Watch a highly revealing PBS Nova documentary providing virtual proof that the NSA could have stopped 9/11 but chose not to. For more along these lines, see concise summaries of deeply revealing news articles on intelligence agency corruption and the disappearance of privacy.

Important Note: Explore our full index to revealing excerpts of key major media news articles on several dozen engaging topics. And don't miss amazing excerpts from 20 of the most revealing news articles ever published.