‘I’m afraid I can’t do that’: Should killer robots be allowed to disobey orders?

Key Excerpts from Article on Website of Bulletin of the Atomic Scientists

Posted: December 27th, 2024

https://thebulletin.org/2024/08/im-afraid-i-cant-do-that-sho...

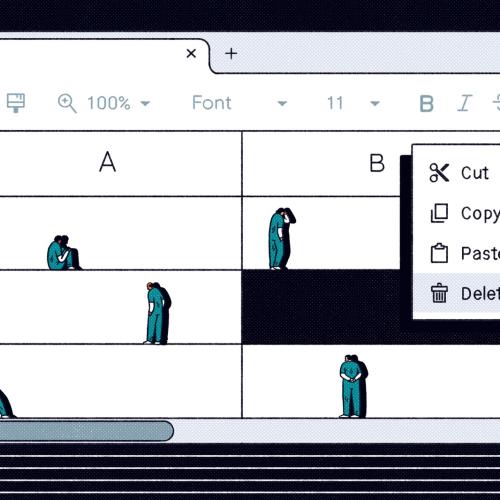

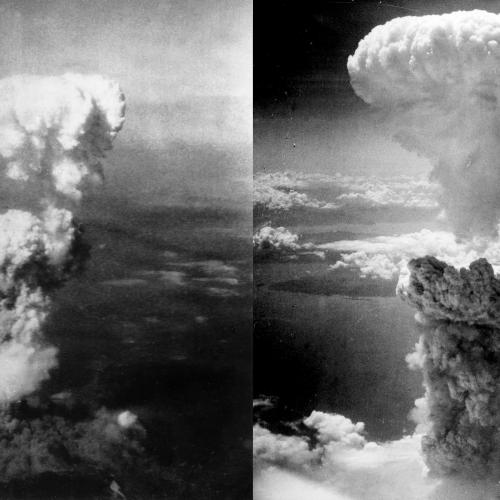

It is often said that autonomous weapons could help minimize the needless horrors of war. Their vision algorithms could be better than humans at distinguishing a schoolhouse from a weapons depot. Some ethicists have long argued that robots could even be hardwired to follow the laws of war with mathematical consistency. And yet for machines to translate these virtues into the effective protection of civilians in war zones, they must also possess a key ability: They need to be able to say no. Human control sits at the heart of governments’ pitch for responsible military AI. Giving machines the power to refuse orders would cut against that principle. Meanwhile, the same shortcomings that hinder AI’s capacity to faithfully execute a human’s orders could cause them to err when rejecting an order. Militaries will therefore need to either demonstrate that it’s possible to build ethical, responsible autonomous weapons that don’t say no, or show that they can engineer a safe and reliable right-to-refuse that’s compatible with the principle of always keeping a human “in the loop.” If they can’t do one or the other ... their promises of ethical and yet controllable killer robots should be treated with caution. The killer robots that countries are likely to use will only ever be as ethical as their imperfect human commanders. They would only promise a cleaner mode of warfare if those using them seek to hold themselves to a higher standard.

Note: Learn more about emerging warfare technology in our comprehensive Military-Intelligence Corruption Information Center. For more, read our concise summaries of news articles on AI and military corruption.
