AI News Stories

Artificial Intelligence (AI) is an emerging technology with great promise and potential for abuse. Below are key excerpts of revealing news articles on AI technology from reliable news media sources. If any link fails to function, a paywall blocks full access, or the article is no longer available, try these digital tools.

AI could mean fewer body bags on the battlefield — but that's exactly what terrifies the godfather of AI. Geoffrey Hinton, the computer scientist known as the "godfather of AI," said the rise of killer robots won't make wars safer. It will make conflicts easier to start by lowering the human and political cost of fighting. Hinton said ... that "lethal autonomous weapons, that is weapons that decide by themselves who to kill or maim, are a big advantage if a rich country wants to invade a poor country." "The thing that stops rich countries invading poor countries is their citizens coming back in body bags," he said. "If you have lethal autonomous weapons, instead of dead people coming back, you'll get dead robots coming back." That shift could embolden governments to start wars — and enrich defense contractors in the process, he said. Hinton also said AI is already reshaping the battlefield. "It's fairly clear it's already transformed warfare," he said, pointing to Ukraine as an example. "A $500 drone can now destroy a multimillion-dollar tank." Traditional hardware is beginning to look outdated, he added. "Fighter jets with people in them are a silly idea now," Hinton said. "If you can have AI in them, AIs can withstand much bigger accelerations — and you don't have to worry so much about loss of life." One Ukrainian soldier who works with drones and uncrewed systems [said] in a February report that "what we're doing in Ukraine will define warfare for the next decade."

Note: As law expert Dr. Salah Sharief put it, "The detached nature of drone warfare has anonymized and dehumanized the enemy, greatly diminishing the necessary psychological barriers of killing." For more, read our concise summaries of news articles on AI and warfare technology.

“Ice is just around the corner,” my friend said, looking up from his phone. A day earlier, I had met with foreign correspondents at the United Nations to explain the AI surveillance architecture that Immigration and Customs Enforcement (Ice) is using across the United States. The law enforcement agency uses targeting technologies which one of my past employers, Palantir Technologies, has both pioneered and proliferated. Technology like Palantir’s plays a major role in world events, from wars in Iran, Gaza and Ukraine to the detainment of immigrants and dissident students in the United States. Known as intelligence, surveillance, target acquisition and reconnaissance (Istar) systems, these tools, built by several companies, allow users to track, detain and, in the context of war, kill people at scale with the help of AI. They deliver targets to operators by combining immense amounts of publicly and privately sourced data to detect patterns, and are particularly helpful in projects of mass surveillance, forced migration and urban warfare. Also known as “AI kill chains”, they pull us all into a web of invisible tracking mechanisms that we are just beginning to comprehend, yet are starting to experience viscerally in the US as Ice wields these systems near our homes, churches, parks and schools. The dragnets powered by Istar technology trap more than migrants and combatants ... in their wake. They appear to violate first and fourth amendment rights.

Note: Read how Palantir helped the NSA and its allies spy on the entire planet. Learn more about emerging warfare technology in our comprehensive Military-Intelligence Corruption Information Center. For more, read our concise summaries of news articles on AI and Big Tech.

In July, US group Delta Air Lines revealed that approximately 3 percent of its domestic fare pricing is determined using artificial intelligence (AI) – although it has not elaborated on how this happens. The company said it aims to increase this figure to 20 percent by the end of this year. According to former Federal Trade Commission Chair Lina Khan ... some companies are able to use your personal data to predict what they know as your “pain point” – the maximum amount you’re willing to spend. In January, the US’s Federal Trade Commission (FTC), which regulates fair competition, reported on a surveillance pricing study it carried out in July 2024. It found that companies can collect data directly through account registrations, email sign-ups and online purchases in order to do this. Additionally, web pixels installed by intermediaries track digital signals including your IP address, device type, browser information, language preferences and “granular” website interactions such as mouse movements, scrolling patterns and video viewing behaviour. This is known as “surveillance pricing”. The FTC Surveillance Pricing report lists several ways in which consumers can protect their data. These include using private browsers to do your online shopping, opting out of consumer tracking where possible, clearing the cookies in your history or using virtual private networks (VPNs) to shield your data from being collected.

Note: For more along these lines, read our concise summaries of news articles on Big Tech and the disappearance of privacy.

Larry Ellison, the billionaire cofounder of Oracle ... said AI will usher in a new era of surveillance that he gleefully said will ensure "citizens will be on their best behavior." Ellison made the comments as he spoke to investors earlier this week during an Oracle financial analysts meeting, where he shared his thoughts on the future of AI-powered surveillance tools. Ellison said AI would be used in the future to constantly watch and analyze vast surveillance systems, like security cameras, police body cameras, doorbell cameras, and vehicle dashboard cameras. "We're going to have supervision," Ellison said. "Every police officer is going to be supervised at all times, and if there's a problem, AI will report that problem and report it to the appropriate person. Citizens will be on their best behavior because we are constantly recording and reporting everything that's going on." Ellison also expects AI drones to replace police cars in high-speed chases. "You just have a drone follow the car," Ellison said. "It's very simple in the age of autonomous drones." Ellison's company, Oracle, like almost every company these days, is aggressively pursuing opportunities in the AI industry. It already has several projects in the works, including one in partnership with Elon Musk's SpaceX. Ellison is the world's sixth-richest man with a net worth of $157 billion.

Note: As journalist Kenan Malik put it, "The problem we face is not that machines may one day exercise power over humans. It is rather that we already live in societies in which power is exercised by a few to the detriment of the majority, and that technology provides a means of consolidating that power." Read about the shadowy companies tracking and trading your personal data, which isn't just used to sell products. It's often accessed by governments, law enforcement, and intelligence agencies, frequently without warrants or oversight. For more along these lines, read our concise summaries of news articles on Big Tech and the disappearance of privacy.

AI’s promise of behavior prediction and control fuels a vicious cycle of surveillance which inevitably triggers abuses of power. The problem with using data to make predictions is that the process can be used as a weapon against society, threatening democratic values. As the lines between private and public data are blurred in modern society, many won’t realize that their private lives are becoming data points used to make decisions about them. What AI does is make this a surveillance ratchet, a device that only goes in one direction, which goes something like this: To make the inferences I want to make to learn more about you, I must collect more data on you. For my AI tools to run, I need data about a lot of you. And once I’ve collected this data, I can monetize it by selling it to others who want to use AI to make other inferences about you. AI creates a demand for data but also becomes the result of collecting data. What makes AI prediction both powerful and lucrative is being able to control what happens next. If a bank can claim to predict what people will do with a loan, it can use that to decide whether they should get one. If an admissions officer can claim to predict how students will perform in college, they can use that to decide which students to admit. Amazon’s Echo devices have been subject to warrants for the audio recordings made by the device inside our homes—recordings that were made even when the people present weren’t talking directly to the device. The desire to surveil is bipartisan. It’s about power, not party politics.

Note: As journalist Kenan Malik put it, "It is not AI but our blindness to the way human societies are already deploying machine intelligence for political ends that should most worry us." Read about the shadowy companies tracking and trading your personal data, which isn't just used to sell products. It's often accessed by governments, law enforcement, and intelligence agencies, frequently without warrants or oversight. For more, read our concise summaries of news articles on AI.

In Silicon Valley, AI tech giants are in a bidding war, competing to hire the best and brightest computer programmers. But a different hiring spree is underway in D.C. AI firms are on an influence-peddling spree, hiring hundreds of former government officials and retaining former members of Congress as consultants and lobbyists. The latest disclosure filings show over 500 entities lobbying on AI policy—from federal rules designed to preempt state and local safety regulations to water and energy-intensive data centers and integration into government contracting and certifications. Lawmakers are increasingly making the jump from serving constituents as elected officials to working directly as influence peddlers for AI interests. Former Sen. Laphonza Butler, D-Calif., a former lobbyist appointed to the U.S. Senate to fill the seat of Sen. Dianne Feinstein, left Congress last year and returned to her former profession. She is now working as a consultant to OpenAI, the firm behind ChatGPT. Former Sen. Richard Burr, R-N.C., recently registered for the first time as a lobbyist. Among his initial clients is Lazarus AI, which sells AI products to the Defense Department. The expanding reach of artificial intelligence is rapidly reshaping hundreds of professions, weapons of war, and the ways we connect with one another. What's clear is that the AI firms set to benefit most from these changes are taking control of the policymaking apparatus to write the laws and regulations during the transition.

Note: For more, read our concise summaries of news articles on AI and Big Tech.

Health practitioners are becoming increasingly uneasy about the medical community making widespread use of error-prone generative AI tools. In their May 2024 research paper introducing a healthcare AI model, dubbed Med-Gemini, Google researchers showed off the AI analyzing brain scans from the radiology lab for various conditions. It identified an "old left basilar ganglia infarct," referring to a purported part of the brain — "basilar ganglia" — that simply doesn't exist in the human body. Board-certified neurologist Bryan Moore flagged the issue ... highlighting that Google fixed its blog post about the AI — but failed to revise the research paper itself. The AI likely conflated the basal ganglia, an area of the brain that's associated with motor movements and habit formation, and the basilar artery, a major blood vessel at the base of the brainstem. Google blamed the incident on a simple misspelling of "basal ganglia." It's an embarrassing reveal that underlines persistent and impactful shortcomings of the tech. In Google's search results, this can lead to headaches for users during their research and fact-checking efforts. But in a hospital setting, those kinds of slip-ups could have devastating consequences. While Google's faux pas more than likely didn't result in any danger to human patients, it sets a worrying precedent, experts argue. In a medical context, AI hallucinations could easily lead to confusion and potentially even put lives at risk.

Note: For more along these lines, read our concise summaries of news articles on AI and corruption in science.

U.S. Customs and Border Protection, flush with billions in new funding, is seeking “advanced AI” technologies to surveil urban residential areas, increasingly sophisticated autonomous systems, and even the ability to see through walls. A CBP presentation for an “Industry Day” summit with private sector vendors ... lays out a detailed wish list of tech CBP hopes to purchase. State-of-the-art, AI-augmented surveillance technologies will be central to the Trump administration’s anti-immigrant campaign, which will extend deep into the interior of the North American continent. [A] reference to AI-aided urban surveillance appears on a page dedicated to the operational needs of Border Patrol’s “Coastal AOR,” or area of responsibility, encompassing the entire southeast of the United States. “In the best of times, oversight of technology and data at DHS is weak and has allowed profiling, but in recent months the administration has intentionally further undermined DHS accountability,” explained [Spencer Reynolds, a former attorney with the Department of Homeland Security]. “Artificial intelligence development is opaque, even more so when it relies on private contractors that are unaccountable to the public — like those Border Patrol wants to hire. Injecting AI into an environment full of biased data and black-box intelligence systems will likely only increase risk and further embolden the agency’s increasingly aggressive behavior.”

Note: For more along these lines, read our concise summaries of news articles on AI and immigration enforcement corruption.

The fusion of artificial intelligence (AI) and blockchain technology has generated excitement, but both fields face fundamental limitations that can’t be ignored. What if these two technologies, each revolutionary in its own right, could solve each other’s greatest weaknesses? Imagine a future where blockchain networks are seamlessly efficient and scalable, thanks to AI’s problem-solving prowess, and where AI applications operate with full transparency and accountability by leveraging blockchain’s immutable record-keeping. This vision is taking shape today through a new wave of decentralized AI projects. Leading the charge, platforms like SingularityNET, Ocean Protocol, and Fetch.ai are showing how a convergence of AI and blockchain could not only solve each other’s biggest challenges but also redefine transparency, user control, and trust in the digital age. While AI’s potential is revolutionary, its centralized nature and opacity create significant concerns. Blockchain’s decentralized, immutable structure can address these issues, offering a pathway for AI to become more ethical, transparent, and accountable. Today, AI models rely on vast amounts of data, often gathered without full user consent. Blockchain introduces a decentralized model, allowing users to retain control over their data while securely sharing it with AI applications. This setup empowers individuals to manage their data’s use and fosters a safer, more ethical digital environment.

Note: Watch our 13 minute video on the promise of blockchain technology. Explore more positive stories like this on reimagining the economy and technology for good.

The forensic scientist Claire Glynn estimated that more than 40 million people have sent in their DNA and personal data for direct-to-consumer genetic testing, mostly to map their ancestry and find relatives. Since 2020, at least two genetic genealogy firms have been hacked and at least one had its genomic data leaked. Yet when discussing future risks of genetic technology, the security policy community has largely focused on spectacular scenarios of genetically tailored bioweapons or superbugs engineered with artificial intelligence (AI). A more imminent weaponization concern is more straightforward: the risk that nefarious actors use the genetic techniques ... to frame, defame, or even assassinate targets. A Russian parliamentary report from 2023 claimed that “by using foreign biological facilities, the United States can collect and study pathogens that can infect a specific genotype of humans.” Designer bioweapons, if ever successfully developed, produced, and tested, would indeed pose a major threat. Unscrupulous actors with access to DNA synthesis infrastructure could ... frame someone for a crime such as murder, for example, by using DNA that synthetically reproduces the DNA regions used in forensic crime analysis. The research and policy communities must dedicate resources not simply to dystopian, low-probability threats like AI-designed bioweapons, but also to gray zone genomics and smaller-scale, but higher-probability, scenarios for misuse.

Note: For more, read our concise summaries of news articles on corruption in biotech.

Negative or fear-framed coverage of AI in mainstream media tends to outnumber positive framings. The emphasis on the negative in artificial intelligence risks overshadowing what could go right, both in the future as this technology continues to develop and right now. AlphaFold, which was developed by the Google-owned AI company DeepMind, is an AI model that predicts the 3D structures of proteins based solely on their amino acid sequences. That’s important because scientists need to predict the shape of a protein to better understand how it might function and how it might be used in products like drugs. By speeding up a basic part of biomedical research, AlphaFold has already managed to meaningfully accelerate drug development in everything from Huntington’s disease to antibiotic resistance. A timely warning about a natural disaster can mean the difference between life and death. That is why Google Flood Hub is so important. An open-access, AI-driven river-flood early warning system, Flood Hub provides seven-day flood forecasts for 700 million people in 100 countries. It works by marrying a global hydrology model that can forecast river levels even in basins that lack physical flood gauges with an inundation model that converts those predicted levels into high-resolution flood maps. This allows villagers to see exactly what roads or fields might end up underwater. Flood Hub ... is one of the clearest examples of how AI can be used for good.

Note: Explore more positive stories like this on technology for good.

From facial recognition to predictive analytics to the rise of increasingly convincing deepfakes and other synthetic video, new technologies are emerging faster than agencies, lawmakers, or watchdog groups can keep up. Take New Orleans, where, for the past two years, police officers have quietly received real-time alerts from a private network of AI-equipped cameras, flagging the whereabouts of people on wanted lists. In 2022, City Council members attempted to put guardrails on the use of facial recognition. But those guidelines assume it's the police doing the searching. New Orleans police have hundreds of cameras, but the alerts in question came from a separate system: a network of 200 cameras equipped with facial recognition and installed by residents and businesses on private property, feeding video to a nonprofit called Project NOLA. Police officers who downloaded the group's app then received notifications when someone on a wanted list was detected on the camera network, along with a location. That has civil liberties groups and defense attorneys in Louisiana frustrated. “When you make this a private entity, all those guardrails that are supposed to be in place for law enforcement and prosecution are no longer there, and we don’t have the tools to ... hold people accountable,” Danny Engelberg, New Orleans’ chief public defender, [said]. Another way departments can skirt facial recognition rules is to use AI analysis that doesn’t technically rely on faces.

Note: Learn about all the high-tech tools police use to surveil protestors. For more along these lines, read our concise summaries of news articles on AI and police corruption.

Four top tech execs from OpenAI, Meta, and Palantir have just joined the US Army. The Army Reserve has commissioned these senior tech leaders to serve as midlevel officers, skipping tradition to pursue transformation. The newcomers won't attend any current version of the military's most basic and ingrained rite of passage: boot camp. Instead, they'll be ushered in through express training that Army leaders are still hashing out, Col. Dave Butler ... said. The execs (Shyam Sankar, the chief technology officer of Palantir; Andrew Bosworth, the chief technology officer of Meta; Kevin Weil, the chief product officer at OpenAI; and Bob McGrew, an advisor at Thinking Machines Lab who was formerly the chief research officer for OpenAI) are joining the Army as lieutenant colonels. Their unit, "Detachment 201," is named for the "201" status code that the Hypertext Transfer Protocol (HTTP) returns when a new resource is created, Butler explained. "In this role they will work on targeted projects to help guide rapid and scalable tech solutions to complex problems," read the Army press release. "By bringing private-sector know-how into uniform, Det. 201 is supercharging efforts like the Army Transformation Initiative, which aims to make the force leaner, smarter, and more lethal." Lethality, a vague Pentagon buzzword, has been at the heart of the massive modernization and transformation effort the Army is undergoing.

Note: For more along these lines, read our concise summaries of news articles on Big Tech and military corruption.

Palantir has long been connected to government surveillance. It was founded in part with CIA money, it has served as an Immigration and Customs Enforcement (ICE) contractor since 2011, and it's been used for everything from local law enforcement to COVID-19 efforts. But the prominence of Palantir tools in federal agencies seems to be growing under President Trump. "The company has received more than $113 million in federal government spending since Mr. Trump took office, according to public records, including additional funds from existing contracts as well as new contracts with the Department of Homeland Security and the Pentagon," reports The New York Times, noting that this figure "does not include a $795 million contract that the Department of Defense awarded the company last week, which has not been spent." Palantir technology has largely been used by the military, the intelligence agencies, the immigration enforcers, and the police. But its uses could be expanding. Representatives of Palantir are also speaking to at least two other agencies—the Social Security Administration and the Internal Revenue Service. Along with the Trump administration's efforts to share more data across federal agencies, this signals that Palantir's huge data analysis capabilities could wind up being wielded against all Americans. Right now, the Trump administration is using Palantir tools for immigration enforcement, but those tools could easily be applied to other ... targets.

Note: Read about Palantir's recent, first-ever AI warfare conference. For more along these lines, read our concise summaries of news articles on Big Tech and intelligence agency corruption.

If there is one thing that Ilya Sutskever knows, it is the opportunities—and risks—that stem from the advent of artificial intelligence. An AI safety researcher and one of the top minds in the field, he served for years as the chief scientist of OpenAI. There he had the explicit goal of creating deep learning neural networks so advanced they would one day be able to think and reason just as well as, if not better than, any human. Artificial general intelligence, or simply AGI, is the official term for that goal. According to excerpts published by The Atlantic ... part of those plans included a doomsday shelter for OpenAI researchers. “We’re definitely going to build a bunker before we release AGI,” Sutskever told his team in 2023. Sutskever reasoned his fellow scientists would require protection at that point, since the technology was too powerful for it not to become an object of intense desire for governments globally. “Of course, it’s going to be optional whether you want to get into the bunker,” he assured fellow OpenAI scientists. Sutskever knows better than most what the awesome capabilities of AI are. He was part of an elite trio behind the 2012 creation of AlexNet, often dubbed by experts as the Big Bang of AI. Recruited by Elon Musk personally to join OpenAI three years later, he would go on to lead its efforts to develop AGI. But the launch of its ChatGPT bot accidentally derailed his plans by unleashing a funding gold rush the safety-minded Sutskever could no longer control.

Note: Watch a conversation on the big picture of emerging technology with Collective Evolution founder Joe Martino and WTK team members Amber Yang and Mark Bailey. For more along these lines, read our concise summaries of news articles on AI.

The US military may soon have an army of faceless suicide bombers at their disposal, as an American defense contractor has revealed their newest war-fighting drone. AeroVironment unveiled the Red Dragon in a video on their YouTube page, the first in a new line of 'one-way attack drones.' This new suicide drone can reach speeds up to 100 mph and can travel nearly 250 miles. The new drone takes just 10 minutes to set up and launch and weighs just 45 pounds. Once the small tripod the Red Dragon takes off from is set up, AeroVironment said soldiers would be able to launch up to five per minute. Since the suicide robot can choose its own target in the air, the US military may soon be taking life-and-death decisions out of the hands of humans. Once airborne, its AVACORE software architecture functions as the drone's brain, managing all its systems and enabling quick customization. Red Dragon's SPOTR-Edge perception system acts like smart eyes, using AI to find and identify targets independently. Simply put, the US military will soon have swarms of bombs with brains that don't land until they've chosen a target and crash into it. Despite Red Dragon's ability to choose a target with 'limited operator involvement,' the Department of Defense (DoD) has said it's against the military's policy to allow such a thing to happen. The DoD updated its own directives to mandate that 'autonomous and semi-autonomous weapon systems' always have the built-in ability to allow humans to control the device.

Note: Drones create more terrorists than they kill. For more, read our concise summaries of news articles on warfare technology and Big Tech.

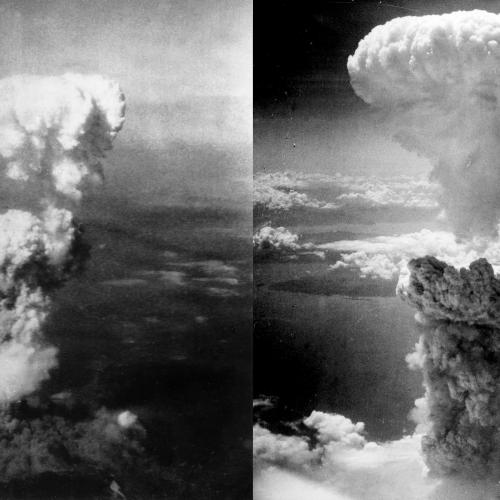

In 2003 [Alexander Karp] – together with Peter Thiel and three others – founded a secretive tech company called Palantir. And some of the initial funding came from the investment arm of – wait for it – the CIA! The lesson that Karp and his co-author draw [in their book The Technological Republic: Hard Power, Soft Belief and the Future of the West] is that “a more intimate collaboration between the state and the technology sector, and a closer alignment of vision between the two, will be required if the United States and its allies are to maintain an advantage that will constrain our adversaries over the longer term. The preconditions for a durable peace often come only from a credible threat of war.” Or, to put it more dramatically, maybe the arrival of AI makes this our “Oppenheimer moment”. For those of us who have for decades been critical of tech companies, and who thought that the future for liberal democracy required that they be brought under democratic control, it’s an unsettling moment. If the AI technology that giant corporations largely own and control becomes an essential part of the national security apparatus, what happens to our concerns about fairness, diversity, equity and justice as these technologies are also deployed in “civilian” life? For some campaigners and critics, the reconceptualisation of AI as essential technology for national security will seem like an unmitigated disaster – Big Brother on steroids, with resistance being futile, if not criminal.

Note: Learn more about emerging warfare technology in our comprehensive Military-Intelligence Corruption Information Center. For more, read our concise summaries of news articles on AI and intelligence agency corruption.

Before signing its lucrative and controversial Project Nimbus deal with Israel, Google knew it couldn’t control what the nation and its military would do with the powerful cloud-computing technology, a confidential internal report obtained by The Intercept reveals. The report makes explicit the extent to which the tech giant understood the risk of providing state-of-the-art cloud and machine learning tools to a nation long accused of systemic human rights violations. Not only would Google be unable to fully monitor or prevent Israel from using its software to harm Palestinians, but the report also notes that the contract could obligate Google to stonewall criminal investigations by other nations into Israel’s use of its technology. And it would require close collaboration with the Israeli security establishment — including joint drills and intelligence sharing — that was unprecedented in Google’s deals with other nations. The rarely discussed question of legal culpability has grown in significance as Israel enters the third year of what has widely been acknowledged as a genocide in Gaza — with shareholders pressing the company to conduct due diligence on whether its technology contributes to human rights abuses. Google doesn’t furnish weapons to the military, but it provides computing services that allow the military to function — its ultimate function being, of course, the lethal use of those weapons. Under international law, only countries, not corporations, have binding human rights obligations.

Note: For more along these lines, read our concise summaries of news articles on AI and government corruption.

In recent years, Israeli security officials have boasted of a “ChatGPT-like” arsenal used to monitor social media users for supporting or inciting terrorism. It was released in full force after Hamas’s bloody attack on October 7. Right-wing activists and politicians instructed police forces to arrest hundreds of Palestinians ... for social media-related offenses. Many had engaged in relatively low-level political speech, like posting verses from the Quran on WhatsApp. Hundreds of students with various legal statuses have been threatened with deportation on similar grounds in the U.S. this year. Recent high-profile cases have targeted those associated with student-led dissent against the Israeli military’s policies in Gaza. In some instances, the State Department has relied on informants, blacklists, and technology as simple as a screenshot. But the U.S. is in the process of activating a suite of algorithmic surveillance tools Israeli authorities have also used to monitor and criminalize online speech. In March, Secretary of State Marco Rubio announced the State Department was launching an AI-powered “Catch and Revoke” initiative to accelerate the cancellation of student visas. Algorithms would collect data from social media profiles, news outlets, and doxing sites to enforce the January 20 executive order targeting foreign nationals who threaten to “overthrow or replace the culture on which our constitutional Republic stands.”

Note: For more along these lines, read our concise summaries of news articles on AI and the erosion of civil liberties.

2,500 US service members from the 15th Marine Expeditionary Unit [tested] a leading AI tool the Pentagon has been funding. The generative AI tools they used were built by the defense-tech company Vannevar Labs, which in November was granted a production contract worth up to $99 million by the Pentagon’s startup-oriented Defense Innovation Unit. The company, founded in 2019 by veterans of the CIA and US intelligence community, joins the likes of Palantir, Anduril, and Scale AI as a major beneficiary of the US military’s embrace of artificial intelligence. In December, the Pentagon said it will spend $100 million in the next two years on pilots specifically for generative AI applications. In addition to Vannevar, it’s also turning to Microsoft and Palantir, which are working together on AI models that would make use of classified data. People outside the Pentagon are warning about the potential risks of this plan, including Heidy Khlaaf ... at the AI Now Institute. She says this rush to incorporate generative AI into military decision-making ignores more foundational flaws of the technology: “We’re already aware of how LLMs are highly inaccurate, especially in the context of safety-critical applications that require precision.” Khlaaf adds that even if humans are “double-checking” the work of AI, there's little reason to think they're capable of catching every mistake. “‘Human-in-the-loop’ is not always a meaningful mitigation,” she says.

Note: For more, read our concise summaries of news articles on warfare technology and Big Tech.

Important Note: Explore our full index to revealing excerpts of key major media news stories on several dozen engaging topics. And don't miss amazing excerpts from 20 of the most revealing news articles ever published.